Few-Shot Machine Learning Explained: Examples, Applications, Research

- Last Updated: December 2, 2024

MobiDev

Data is what powers machine learning solutions. Quality datasets make it possible to train models to the required detection and classification accuracy, but collecting enough relevant training data is often a challenge in itself. For instance, data-intensive apps need human annotators to label a huge number of samples, which complicates management and drives up costs for businesses. On top of that, data acquisition can be restricted by safety regulations, privacy, or ethical concerns.

When we have a limited dataset with only a handful of samples per class, few-shot learning may be useful. This model training approach makes use of small datasets and achieves acceptable levels of accuracy even when the data is fairly scarce.

It is particularly useful in applications where privacy concerns or a simple lack of quality representative images limit the data available for training.

This article will explain what few-shot learning is, how it works under the hood, and share with you the results of research on how to get optimum results from a few-shot learning model using a relatively simple approach for image classification tasks.

What is Few-Shot Learning?

The starting point of machine learning app development is a dataset; as a rule, the more data, the better the end result. With a large amount of data, the model becomes more accurate in its predictions. However, in the case of few-shot learning (FSL), we attempt to reach almost the same accuracy with fewer data points. This approach reduces the normally high costs of collecting and labeling data for model training. Working with a smaller dataset also cuts computational costs.

Note that FSL may also be referred to as low-shot learning (LSL), and in some sources, this term is applied to ML problems where the volume of the training dataset is limited.

- FSL or LSL problems arise in many business challenges, such as object recognition, image classification, character recognition, NLP, and other tasks. In each case, the machine has to perform the task with only a small amount of training data. A notable example of FSL comes from robotics, where robots learn how to move and navigate after getting acquainted with only a few sample scenarios.

- If we were to use only one scenario to train the robot, this would be known as a one-shot ML problem. In specific cases, for example when not all classes are labeled in the training data, there may be zero training scenarios for particular situations. This occurrence is termed a zero-shot ML problem.

Approaches of Few-Shot Learning

To tackle few-shot and one-shot machine learning problems, we can apply one of two approaches.

Data-Level Approach

If there is not enough data to fit the algorithm without overfitting or underfitting the model, additional data can be added to complement the existing data. This strategy lies at the core of the data-level approach, and its success depends on the external data sources that are available. For instance, building an image classifier without sufficient labeled examples for the different categories may be possible by drawing on external sources of similar images, even if these images are unlabeled and can only be used in a semi-supervised manner.

Besides external data sources, we can use techniques that attempt to produce novel data samples from the same distribution as the training data. For example, adding random noise to the original sample images generates new data.
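As a rough illustration of noise-based augmentation, the sketch below adds Gaussian noise to an image stored as a NumPy array with pixel values in [0, 1]. The function name `augment_with_noise`, the noise level `sigma`, and the flat grey test image are assumptions made for this example and do not come from the research described later.

```python
import numpy as np

def augment_with_noise(image, n_copies=4, sigma=0.05, seed=0):
    """Return n_copies noisy variants of an image with float pixels in [0, 1]."""
    rng = np.random.default_rng(seed)
    # Draw independent Gaussian noise for every copy and every pixel.
    noise = rng.normal(loc=0.0, scale=sigma, size=(n_copies,) + image.shape)
    # Broadcasting adds the source image to each noise sample;
    # clipping keeps the results inside the valid pixel range.
    return np.clip(image[None, ...] + noise, 0.0, 1.0)

# Toy stand-in for a real photo: a flat mid-grey 32x32 RGB image (assumption).
image = np.full((32, 32, 3), 0.5)
augmented = augment_with_noise(image)
print(augmented.shape)  # (4, 32, 32, 3)
```

In practice the noise level would need tuning: too little adds nothing new, while too much can destroy the details that separate the classes.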

Alternatively, new image samples can be synthesized using generative adversarial networks (GANs). For example, a GAN can produce images from new perspectives if enough examples are available in the training set.

Parameter-Level Approach

The parameter-level approach involves meta-learning — a technique that teaches the model to understand which features are crucial to perform machine learning tasks. This can be achieved by developing a strategy for controlling how the model’s parameter space is exploited.

Limiting the parameter space helps to counter overfitting, because a basic algorithm has to generalize from only a small number of training samples. Another way to improve the algorithm is to guide how it searches a large parameter space.

The main task is to teach the algorithm to choose the best path through the parameter space and give targeted predictions.

Meta-learning employs a teacher model and a student model. The teacher model sorts out the encapsulation of the parameter space. The student model learns to classify the training examples using guidance from the teacher model: the output of the teacher model serves as the basis for training the student model.

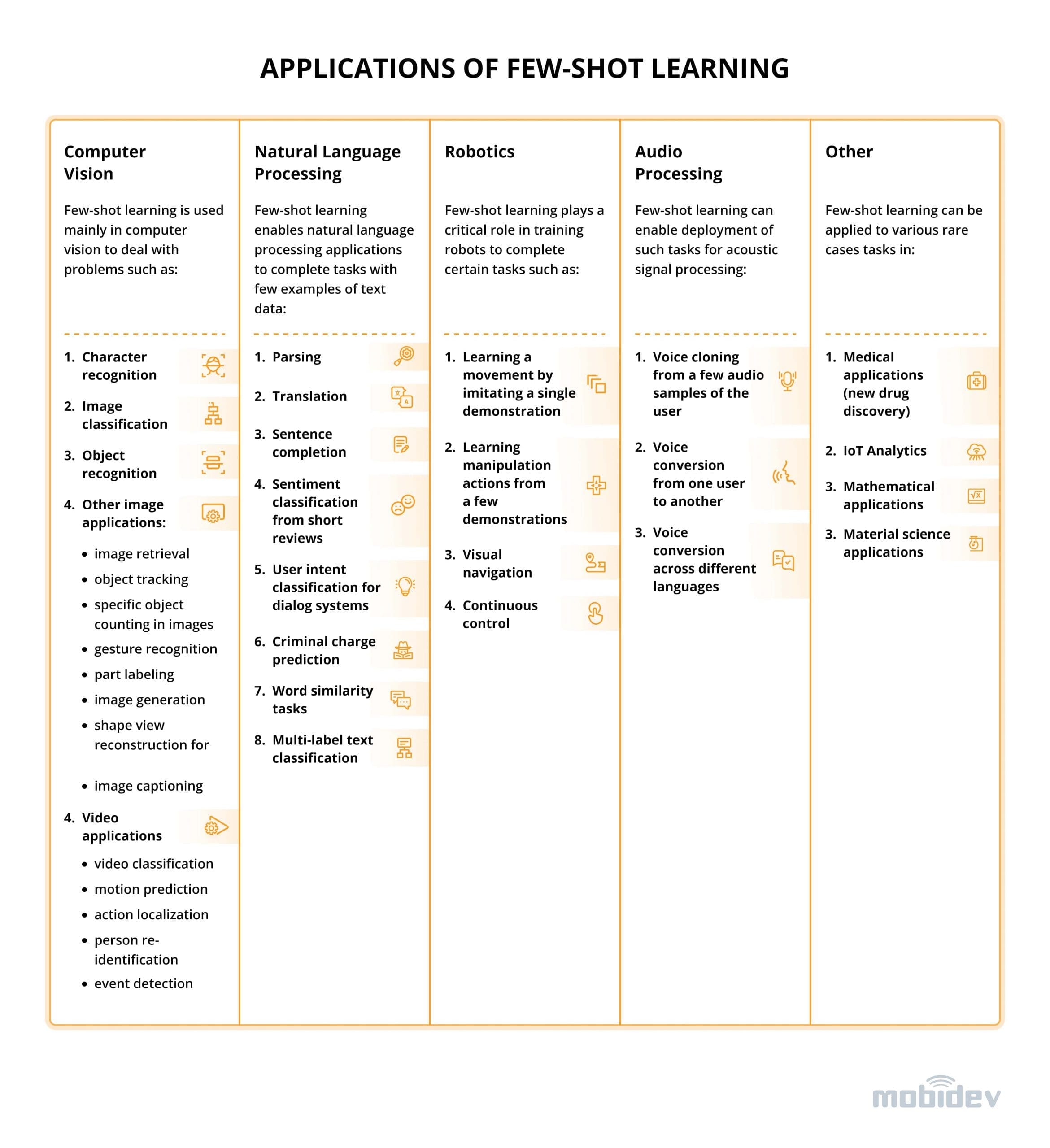

Applications of Few-Shot Learning

Few-shot learning underpins applications in many popular fields, namely:

- Computer Vision

- Natural Language Processing

- Robotics

- Audio processing

- Healthcare

- IoT

In computer vision, FSL handles object and character recognition, image and video classification, scene location, and similar tasks. In NLP, it is applied to translation, text classification, sentiment analysis, and even criminal charge prediction. A typical case is character generation, where machines parse and create handwritten characters after brief training on a few examples. Other use cases include object tracking, gesture recognition, image captioning, and visual question answering.

Few-shot learning assists in training robots to imitate movements and navigate. In audio processing, FSL is capable of creating models that clone voice and convert it across various languages and users.

A remarkable example of a few-shot learning application is drug discovery. In this case, the model is trained to explore new molecules and detect useful ones that could be used in new drugs. New molecules that haven’t gone through clinical trials can be toxic or have low activation rates, and data on them is scarce, so the model has to be trained on a small number of samples.

Few-shot learning applications. Source: MobiDev

Let’s take a deeper dive into how few-shot learning works and overview the results of MobiDev’s research.

Research: Few-Shot Learning For Image Classification

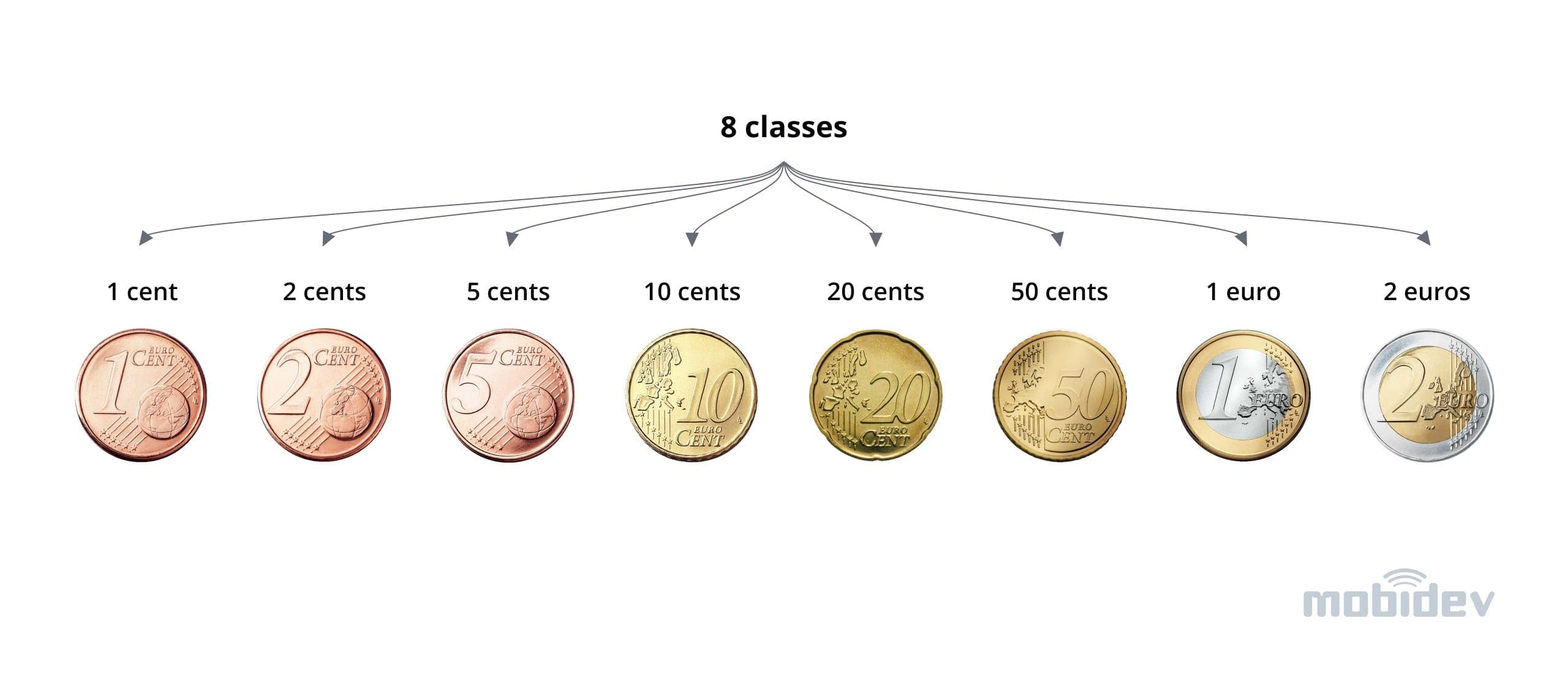

We selected a sample problem of image classification. Our model has to determine the category the image belongs to for the purposes of identifying the denomination of a coin. This could be used to quickly count the total sum of coins lying on a table by splitting the larger image into smaller ones containing individual coins and classifying each small image.
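The counting step mentioned above is simple once each cropped coin has a predicted class. The sketch below assumes the classifier returns one label per coin; the label strings (`"1c"`, `"2e"`, and so on) and the function name `total_in_euros` are hypothetical, while the eight face values are the standard euro coin denominations.

```python
# Face value of each of the 8 euro coin classes, in cents.
# The label strings are hypothetical names chosen for this sketch.
COIN_VALUE_CENTS = {
    "1c": 1, "2c": 2, "5c": 5, "10c": 10,
    "20c": 20, "50c": 50, "1e": 100, "2e": 200,
}

def total_in_euros(predicted_labels):
    """Sum the face values of the predicted coin classes."""
    # Summing in integer cents avoids floating-point drift.
    cents = sum(COIN_VALUE_CENTS[label] for label in predicted_labels)
    return cents / 100

# One predicted label per cropped coin image (made-up predictions).
predictions = ["2e", "50c", "20c", "5c"]
print(total_in_euros(predictions))  # 2.75
```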

Data Sets

First of all, we needed a few-shot learning dataset to experiment with. To that end, we collected a dataset of euro coin images from public sources, with 8 classes according to the number of coin denominations.

Image classes with examples. Source: MobiDev

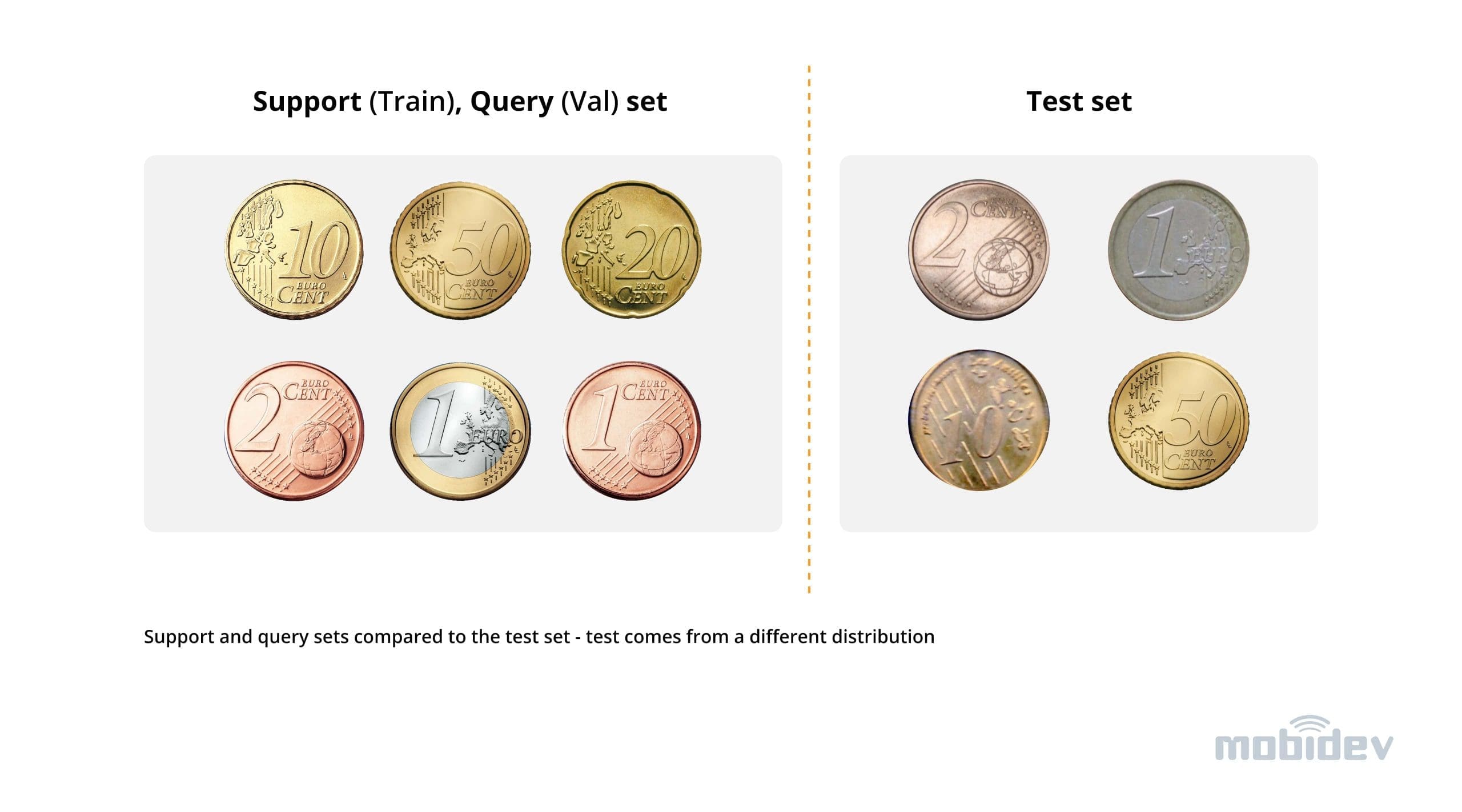

The data was split into 3 subsets: the support (train), query (val), and test sets. The support and query sets were selected from one distribution, whereas the test set was purposefully chosen to come from a different one: images in the test set are over- or underexposed, tilted, show coins with unusual colors, contain blur, and so on.

This data preparation was done to simulate the production setting where the model is often trained and validated on good quality data from open datasets/internet scraping, whereas the samples from real operation conditions are usually different: users can capture images with different devices, have poor lighting conditions, and so on. A robust CV solution should be able to tackle these problems to a certain extent, and our goal is to figure out how this will work out for a few-shot learning approach, which presupposes that we do not have much support data to work with.

Source: MobiDev

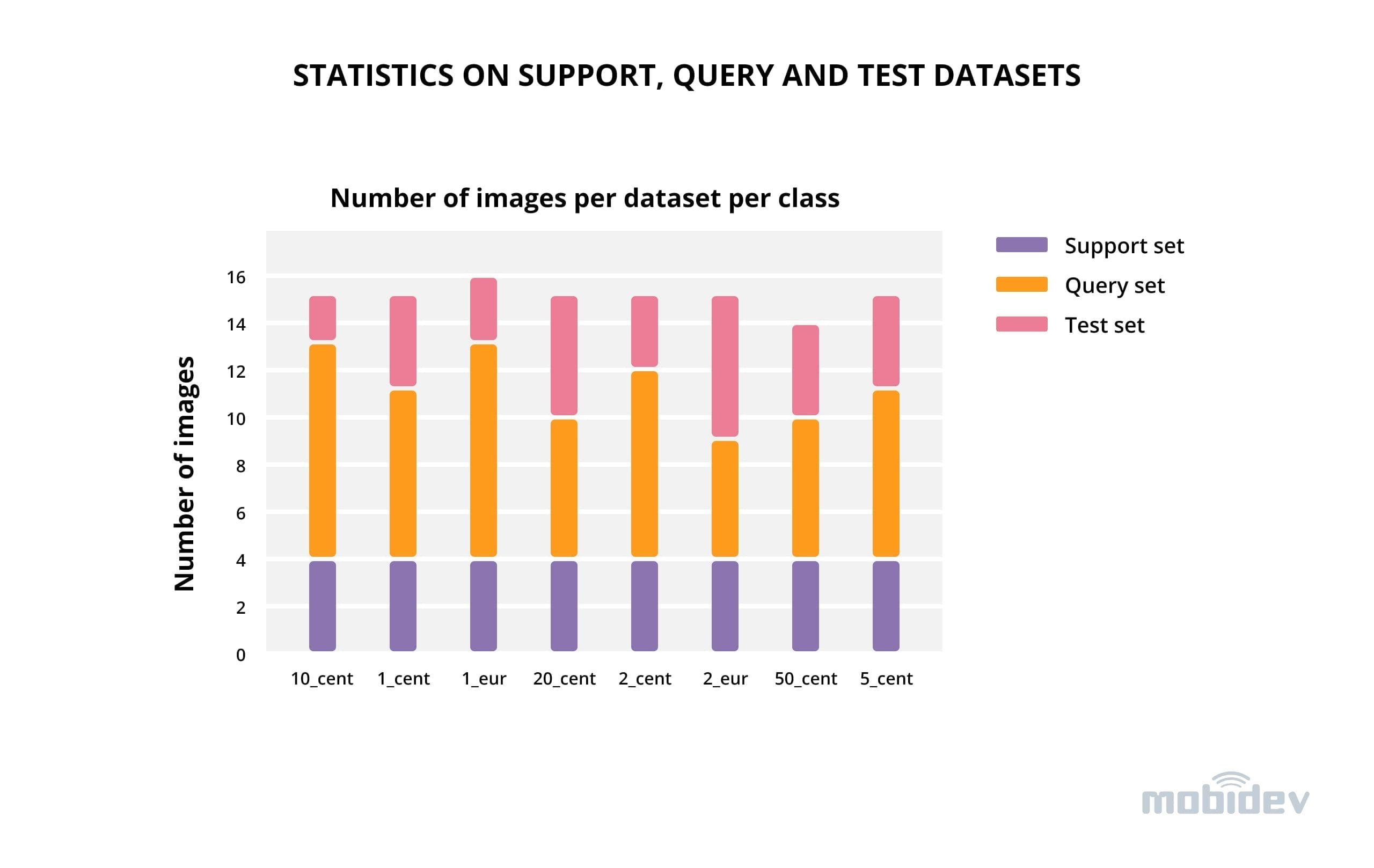

Finally, the statistics on the number of available data samples can be seen below. We managed to collect 121 data samples, roughly 15 images per coin denomination. The support set is balanced: each class has the same number of samples, with up to 4 images per class for few-shot training. The query and test sets are slightly imbalanced and contain approximately 7 and 4 images per class, respectively. The distribution of samples per set is as follows:

- Support: 32

- Query: 57

- Test: 31

Statistics on support, query, and test datasets. Source: MobiDev

Selected Approach

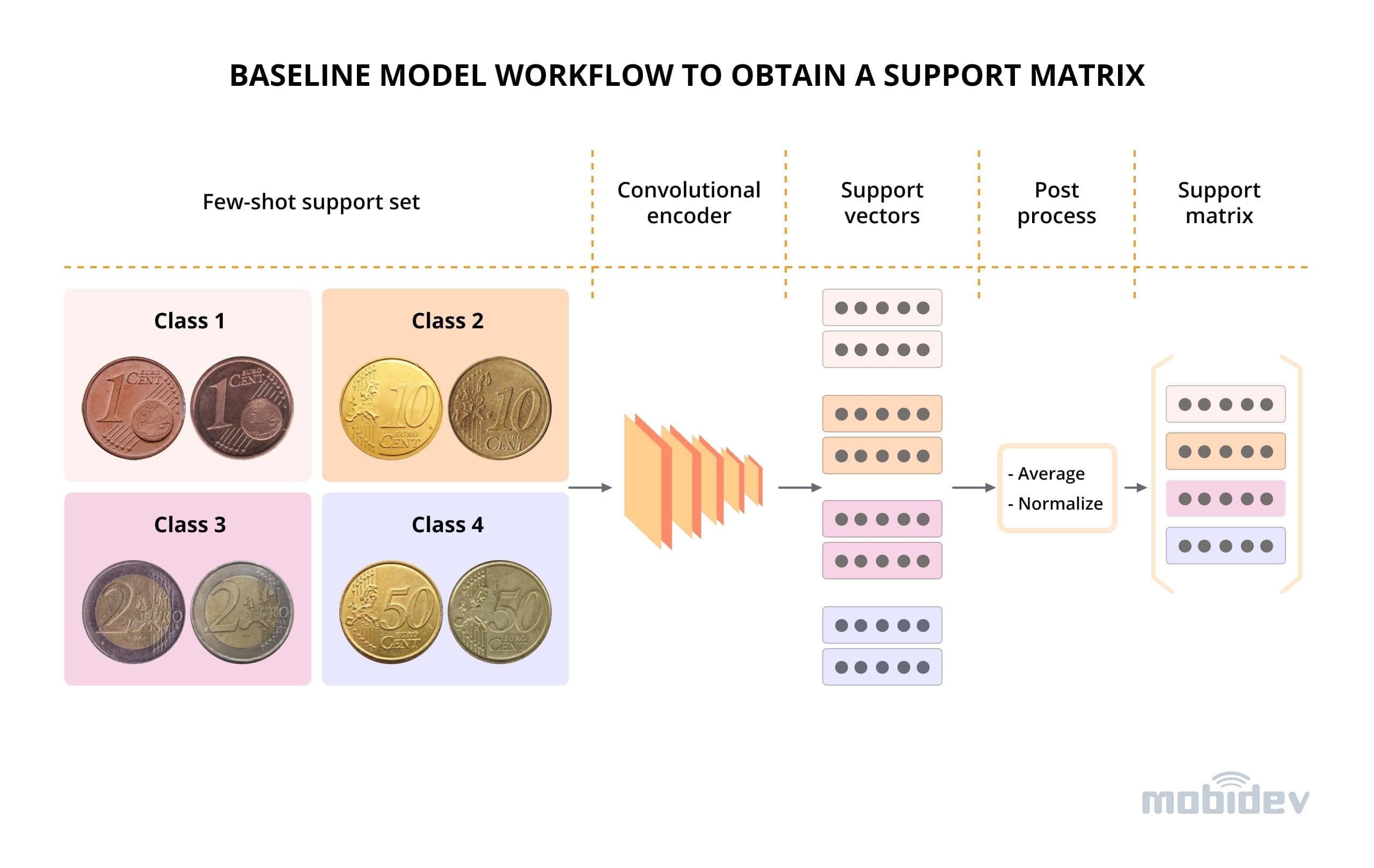

In the selected approach, we use a pre-trained Convolutional Neural Network (CNN) as an encoder to obtain compressed image representations. The model is able to produce meaningful image representations that reflect the content of the images since it has seen a large dataset and was pre-trained on ImageNet prior to few-shot learning. These image representations can then be used in few-shot learning and classification.

Baseline model workflow to obtain a support matrix. Source: MobiDev
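To make the idea of a support matrix more concrete, here is a minimal sketch under stated assumptions. It assumes the encoder has already turned each image into a fixed-length embedding, averages the support embeddings of each class into one row, and classifies a query image by cosine similarity to those rows. This is a common few-shot baseline and not necessarily the exact procedure used in the research. The random vectors stand in for real encoder output, and all function names are hypothetical.

```python
import numpy as np

def l2_normalize(x, eps=1e-12):
    """Scale each row to unit length so dot products become cosine similarities."""
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)

def build_support_matrix(embeddings, labels, n_classes):
    """Average the embeddings of each class into one row, then normalize."""
    prototypes = np.stack([
        embeddings[labels == c].mean(axis=0) for c in range(n_classes)
    ])
    return l2_normalize(prototypes)

def classify(query_embeddings, support_matrix):
    """Pick, for each query, the class row with the highest cosine similarity."""
    similarities = l2_normalize(query_embeddings) @ support_matrix.T
    return similarities.argmax(axis=1)

# Synthetic stand-in for encoder output: 8 classes, 4 shots each, 64-dim features.
rng = np.random.default_rng(0)
n_classes, shots, dim = 8, 4, 64
class_centers = rng.normal(size=(n_classes, dim))
support_labels = np.repeat(np.arange(n_classes), shots)
support_emb = class_centers[support_labels] + 0.1 * rng.normal(size=(n_classes * shots, dim))
query_labels = np.arange(n_classes)
query_emb = class_centers + 0.1 * rng.normal(size=(n_classes, dim))

support_matrix = build_support_matrix(support_emb, support_labels, n_classes)
predicted = classify(query_emb, support_matrix)
print(support_matrix.shape)  # (8, 64)
```

With real images, the embeddings would come from the pre-trained CNN with its classification head removed. The synthetic clusters here are far better separated than real coin photos, so the sketch says nothing about achievable accuracy.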

Multiple steps can be taken to improve the performance of a few-shot learning algorithm:

- Baselining

- Fine-tuning

- Fine-tuning with Entropy Regularization

- Adam optimizer

To learn more about each step, a full PDF version of the research can be found on MobiDev's website.

Experiment Results

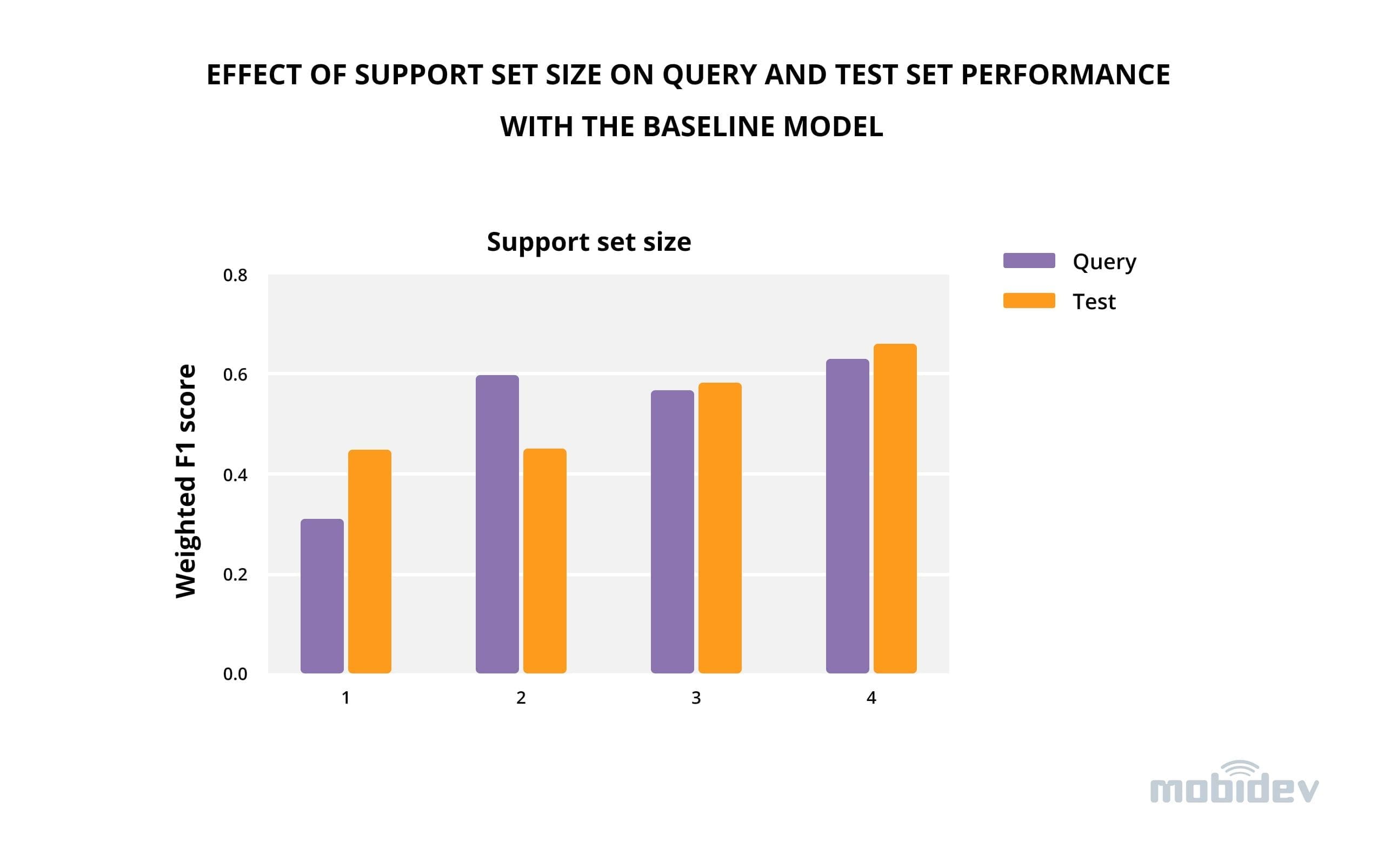

With the selected model configuration, we ran several experiments on the collected data. First of all, we used a baseline model without any fine-tuning or additional regularization. Even in this default setting the results were surprisingly decent as illustrated in the table below: 0.66 / 0.62 weighted F1 scores for query/test sets, respectively. (We chose a weighted F1 metric as it takes into account imbalance in class sizes as well as combining both precision and recall scores.)
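For readers unfamiliar with the metric, the sketch below computes a weighted F1 score from scratch: the F1 score of each class is weighted by the number of true samples in that class. It follows the usual definition (the one behind `average="weighted"` in scikit-learn's `f1_score`). The labels are made-up toy values, not data from the experiments.

```python
import numpy as np

def weighted_f1(y_true, y_pred):
    """Per-class F1 averaged with weights equal to each class's true sample count."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    total = 0.0
    for c in np.unique(y_true):
        tp = np.sum((y_pred == c) & (y_true == c))  # correctly predicted as c
        fp = np.sum((y_pred == c) & (y_true != c))  # wrongly predicted as c
        fn = np.sum((y_pred != c) & (y_true == c))  # samples of c that were missed
        # F1 = 2PR / (P + R) simplifies to 2TP / (2TP + FP + FN).
        f1 = 2 * tp / (2 * tp + fp + fn)
        total += f1 * np.sum(y_true == c)
    return total / len(y_true)

# Toy labels for three classes (made up for illustration).
y_true = [0, 0, 0, 1, 1, 2]
y_pred = [0, 0, 1, 1, 1, 2]
print(round(weighted_f1(y_true, y_pred), 3))  # 0.833
```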

At this stage, we experimented with the size of the support set. As expected, a larger support set results in better query and test performance, although the benefit of each additional sample diminishes as the support set grows.

Interestingly, with small support set sizes (1-3) there are random shifts in query versus test set performance: one can improve while the other decreases. At support set size 4, however, both sets improve.

Effect of support set size on query and test set performance with the baseline model. Source: MobiDev

Moving on from the baseline model, fine-tuning with the Adam optimizer on mini-batches from the support set improves the weighted F1 score by 2 percentage points for both the query and test sets. Adding entropy regularization on the query set to the fine-tuning pipeline improves the results by a further 1-4 percentage points.
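One way to picture entropy regularization is as an extra term in the fine-tuning loss: cross-entropy on the labeled support set, plus the average prediction entropy on the unlabeled query set, which rewards confident predictions. The sketch below only evaluates such a loss with NumPy. The weight `entropy_weight` and all function names are assumptions, and the source does not state the exact formulation used. In a real pipeline, an optimizer such as Adam would minimize this loss inside an automatic differentiation framework.

```python
import numpy as np

def softmax(logits):
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

def cross_entropy(logits, labels):
    """Mean negative log-probability of the correct class (needs labels)."""
    probs = softmax(logits)
    return -np.mean(np.log(probs[np.arange(len(labels)), labels] + 1e-12))

def mean_entropy(logits):
    """Mean Shannon entropy of the predicted distributions (no labels needed)."""
    probs = softmax(logits)
    return -np.mean(np.sum(probs * np.log(probs + 1e-12), axis=1))

def fine_tuning_loss(support_logits, support_labels, query_logits, entropy_weight=0.1):
    # entropy_weight is a hypothetical hyperparameter chosen for this sketch.
    return (cross_entropy(support_logits, support_labels)
            + entropy_weight * mean_entropy(query_logits))

# Toy logits over 8 classes: undecided versus confident predictions.
uniform = np.zeros((2, 8))
confident = np.zeros((2, 8))
confident[0, 0] = 10.0
confident[1, 1] = 10.0
labels = np.array([0, 1])

print(fine_tuning_loss(confident, labels, uniform))    # higher: query predictions are undecided
print(fine_tuning_loss(confident, labels, confident))  # lower: query predictions are confident
```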

It is important to point out that accuracy improves alongside the weighted F1 score, meaning that the model performs better not only class-wise but also in relation to the total amount of data. The final accuracy of 0.7 and 0.68 for query and test sets means the model that had only 4 image examples for training could successfully guess the class of the image in 40/57 examples for the query set and 21/31 examples for the test set.

| Run settings | Accuracy (query set) | Accuracy (test set) | Weighted F1 (query set) | Weighted F1 (test set) |
|---|---|---|---|---|
| Baseline | 0.67 | 0.61 | 0.66 | 0.62 |
| Baseline + fine-tuning | 0.68 | 0.65 | 0.68 | 0.64 |
| Baseline + fine-tuning + regularization | 0.70 | 0.68 | 0.69 | 0.69 |

Few-shot metrics on val/test datasets.

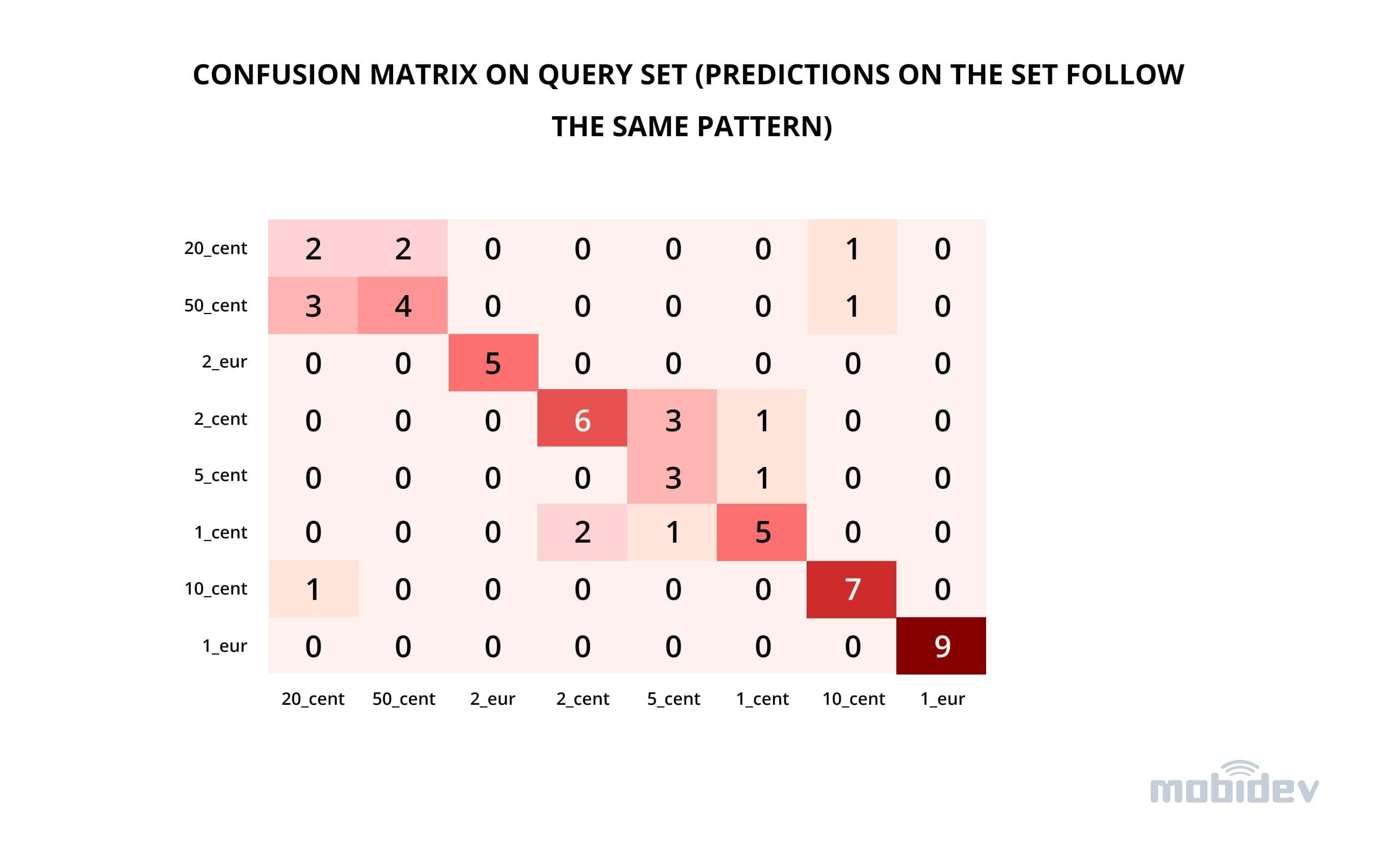

Looking at the confusion matrix on the query set, we can spot some interesting tendencies. Firstly, the categories that were easiest for the model to guess were the high-value 1 and 2 euro coins, because these coins have two distinct parts delineated by color: inner and outer. These two parts immediately distinguish them from the single-material, smaller-value coins.

In addition, in 1 euro the inner part is made of grey copper-nickel alloy, while the outer part is composed of golden nickel-brass alloy. In 2 euro coins, the alloys are reversed: copper-nickel for the outer part and nickel-brass for the inner part. This distinction makes it easier to differentiate one coin from another.

The two hardest distinctions were between 20 and 50 cent coins and between 5 and 2 cent coins: the model often confused the coins within each pair. This is because the differences within these pairs are more subtle: the color, the shape of the coins, and even the textual inscriptions are the same. The only distinction is the denomination inscribed on the coin. Since the model was not specifically trained on coins, it does not know which areas of a coin deserve the most attention, and can therefore undervalue the importance of the denomination inscription.
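Confused pairs like these can be read off a confusion matrix programmatically. The sketch below builds a confusion matrix from true and predicted labels and lists the class pairs with the most mutual errors. The labels are invented toy values chosen to mimic the pattern described above, not the actual results of the study, and the function names are hypothetical.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, n_classes):
    """Rows are true classes, columns are predicted classes."""
    matrix = np.zeros((n_classes, n_classes), dtype=int)
    # np.add.at accumulates correctly even when the same cell repeats.
    np.add.at(matrix, (y_true, y_pred), 1)
    return matrix

def most_confused_pairs(matrix, class_names, top_k=2):
    """Rank unordered class pairs by the number of errors in either direction."""
    symmetric = matrix + matrix.T
    pairs = [
        (int(symmetric[i, j]), class_names[i], class_names[j])
        for i in range(len(class_names))
        for j in range(i + 1, len(class_names))
        if symmetric[i, j] > 0
    ]
    return sorted(pairs, reverse=True)[:top_k]

# Invented toy labels for four of the coin classes (not the study's data).
class_names = ["2c", "5c", "20c", "50c"]
y_true = np.array([0, 0, 1, 1, 2, 2, 3, 3])
y_pred = np.array([0, 1, 0, 1, 2, 3, 3, 3])

matrix = confusion_matrix(y_true, y_pred, len(class_names))
print(most_confused_pairs(matrix, class_names))
# [(2, '2c', '5c'), (1, '20c', '50c')]
```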

Wrapping Up

In the demanding world of practical Machine Learning, there is always a balance between the accuracy of the developed solutions and the amount of work and data the solution requires. Sometimes, it is preferable to quickly create a solution that meets only minimum accuracy requirements, deploy it in production, and iterate from there. In this scenario, the approach described in this article could work well. We found that for a problem of a few-shot classification of coins by image, it is possible to reach ~70% accuracy given as few as 4 image examples per coin denomination.

The problem is quite difficult for a model pre-trained on a broad dataset (ImageNet), as the model does not know which specific part of a coin it should focus on to make an accurate prediction. We expect the accuracy could be even higher for a problem where the distinctive features are more evenly distributed across the image (e.g. fruit type and quality classification).
