Mirai Botnet Army, Case Against Standardization in IoT, and Equality in Machine Learning

- Last Updated: December 2, 2024

Yitaek Hwang

Mirai IoT Botnet

On Friday, a massive DDoS attack hit Dyn, disrupting Twitter, Amazon, GitHub, and Netflix, to name a few. According to the security firm Flashpoint, part of the attack involved Mirai, malware that compromises IoT devices with weak security measures (e.g. factory default settings). KrebsOnSecurity reported that DVRs and IP cameras made by XiongMai Technologies were responsible for these attacks. This industry-wide attack highlights the growing concern over the security of IoT devices.

Summary:

- Mirai scans the Internet for IoT devices with weak security settings, then uses them to launch DDoS attacks at an online target until the target's systems can no longer handle the volume of requests.

- Many inexpensive IoT devices are particularly vulnerable to this kind of malware, and the Mirai-based botnet will continue to be a problem unless those devices are unplugged from the Internet.

- Specifically, many IoT products have passwords hardcoded into the firmware, which lets attackers connect via Telnet or SSH and leaves the end user unable to disable or deny such malicious connections.
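
As a rough illustration of the points above, the sketch below audits a device inventory for the combination that Mirai exploits: remote login left open with factory default credentials. Everything here is an assumption made for the example. The `Device` record, the `at_risk` helper, and the credential pairs are hypothetical placeholders, not Mirai's actual credential list or any vendor's real defaults.

```python
from dataclasses import dataclass

# Hypothetical placeholder pairs for illustration only;
# this is NOT the credential list used by Mirai.
KNOWN_DEFAULTS = {("admin", "admin"), ("root", "root"), ("admin", "")}


@dataclass
class Device:
    name: str
    username: str
    password: str
    remote_login_enabled: bool  # Telnet or SSH reachable from the network


def at_risk(device: Device) -> bool:
    """Flag devices that expose remote login with a known default credential."""
    uses_default = (device.username, device.password) in KNOWN_DEFAULTS
    return device.remote_login_enabled and uses_default


# Made-up inventory for the example.
inventory = [
    Device("lobby-camera", "admin", "admin", True),
    Device("office-dvr", "root", "root", False),
    Device("lab-sensor", "operator", "s7!kQ2#x", True),
]

flagged = [d.name for d in inventory if at_risk(d)]
print(flagged)
```

A check like this only helps when the credentials can actually be changed. Devices with passwords hardcoded in firmware would still need to be patched or taken offline.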

Takeaway: One day after Dyn posted “Recent IoT-based Attacks: What Is the Impact On Managed DNS Operators?” it fell victim to the Mirai-based botnet attack. Hopefully, this incident propels an industry-wide effort to remove vulnerable devices, patch security settings, and give customers clearer instructions for protecting their devices. If you own an IoT device, check out this list of potentially vulnerable devices and take the appropriate measures to protect the Internet.

+ Periscope: DDoS DNS attack explained by Dale Drew at Level 3

A Case Against Standardization

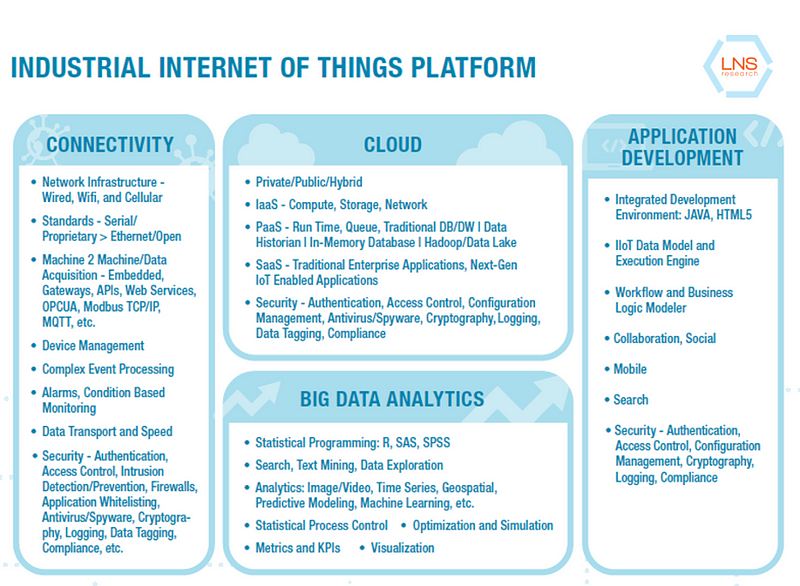

IoT One released a statement this week making a case against standardization in industrial IoT. This is an interesting position, since just about everyone agrees that the lack of standardization is one of the barriers to IoT adoption. But IoT One argues that standardization might limit innovation. Is unconstrained innovation a reason to leave industrial IoT without a standard?

Summary:

- Standardization brings three main benefits: 1) reduced time to market, 2) simplified security, 3) simplified cross-platform communication.

- Stephen Mraz of Machine Design, however, believes that a lack of standards is helping drive unconstrained innovation. There are also open questions: who will be responsible for making standards in industrial IoT, and what effect will those organizations have on smaller, innovative players?

Takeaway: As seen in the LNS Research diagram, there are many communication protocols to standardize. Considering what happened with Mirai on Friday, there is a strong case to be made for standardization to help with security issues alone. With the merger of the AllSeen Alliance and the Open Connectivity Foundation, the industry seems to be moving toward standardization. Although I agree with the team at IoT One and Stephen Mraz to a certain degree, I believe standardization will not hinder the rate of innovation and will instead accelerate growth.

Quote of the Week

At the heart of our approach is the idea that individuals who qualify for a desirable outcome should have an equal chance of being correctly classified for this outcome. In our fictional loan example, it means the rate of ‘low risk’ predictions among people who actually pay back their loan should not depend on a sensitive attribute like race or gender. We call this principle equality of opportunity in supervised learning.

- Moritz Hardt: “Equality of Opportunity in Machine Learning”

The idea of garbage in, garbage out applies to machine learning as well: unintentional prejudice left uncorrected in a dataset can lead to unjust outcomes. The Google Brain Team released a paper on Equality of Opportunity in Machine Learning early this month to start a conversation on preventing discrimination based on sensitive attributes (e.g. race, gender, disability, or religion).

The approach by Hardt et al. reveals possible prejudice in the dataset and helps the decision maker adjust the algorithm to balance classification accuracy against non-discrimination. You can see a visualization of this tradeoff or read the paper submitted to the Conference on Neural Information Processing Systems in Barcelona.
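
To make the principle in the quote concrete, here is a minimal sketch of how one might check it for the fictional loan example: among people who actually repaid, compare the rate of "low risk" predictions across groups. This is an illustration only, not the method from the paper. The function names, the record layout, and the sample data are all assumptions made up for the example.

```python
from collections import defaultdict


def true_positive_rates(records):
    """Per-group rate of 'low risk' predictions among people who repaid.

    records: iterable of (group, repaid, predicted_low_risk) tuples.
    """
    qualified = defaultdict(int)  # people who actually repaid, per group
    correct = defaultdict(int)    # of those, how many were predicted low risk
    for group, repaid, predicted_low_risk in records:
        if repaid:
            qualified[group] += 1
            if predicted_low_risk:
                correct[group] += 1
    return {group: correct[group] / qualified[group] for group in qualified}


def opportunity_gap(records):
    """Largest difference in true positive rate between any two groups."""
    rates = true_positive_rates(records)
    return max(rates.values()) - min(rates.values())


# Made-up data: (group, repaid, predicted_low_risk)
loans = [
    ("A", True, True), ("A", True, True), ("A", True, False), ("A", False, False),
    ("B", True, True), ("B", True, False), ("B", True, False), ("B", False, True),
]

rates = true_positive_rates(loans)
gap = opportunity_gap(loans)
print(rates, gap)
```

In this toy data, two of the three group A applicants who repaid are predicted low risk, compared with one of three in group B, so the classifier would not satisfy equality of opportunity. A gap near zero is what the principle asks for.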

The Rundown

- Deep Learning Papers Reading Roadmap — GitHub

- European ML Landscape — Medium

- Preparing for the Future of AI — WhiteHouse

- 10 Modern Software Over-engineering Mistakes — Medium

- Bayesian Hierarchical Modeling using Baseball — VarianceExplained

Resources

- Numerai: Hedge fund built by anonymous data scientists

- VirtualForest.io: Build your own forest in VR

- Noms: Decentralized database based on git

- Dataquest.io: NumPy tutorial

- MarI/O: Machine Learning for Mario
