How To Implement Object Recognition on Live Stream

How To Implement Object Recognition on Live Stream

- Last Updated: December 2, 2024

DataArt

- Last Updated: December 2, 2024

Introduction

Image recognition is very widely used in machine learning. There are many different approaches and solutions to it, but none of them fitted our needs. We needed a completely local solution running on a tiny computer to deliver the recognition results to a cloud service. This article describes our approach to building an object recognition solution with TensorFlow.

YOLO

YOLO is a state-of-the-art real-time object detection system. On the official site you can find SSD300, SSD500, YOLOv2, and Tiny YOLO that have been trained on two different datasets VOC 2007+2012 and COCO trainval. Also you can find more variations of configurations and training datasets across the internet e.g. YOLO9k.

Due to the wide range of available variants it makes it possible to select the version most suited to your needs. For example, Tiny YOLO is the smallest variant that can work fast even on smartphones or Raspberry Pi. We liked this variant and used it in our project.

DarkNet and TensorFlow

The YOLO model was developed for the DarkNet framework. This framework had some inconvenient aspects for us. It stores trained data (weights) in a format that can be recognized in different ways on different platforms. This issue can be a stumbling block because usually you would want to train model on “fast and furious” hardware and then use it anywhere else.

DarkNet is written in C and doesn’t have any other programming interface, so if you need to use another programming language due to platform specifications or your own preferences, you need to do more work to integrate it. Also, it is only distributed in source code format and the compiling process on some platforms may be painful.

On the other hand, we have TensorFlow, a handy and flexible computing system. It’s transferable and can be used on most platforms. TensorFlow provides an API for Python, C++, Java, Go and other community supported programming languages. The framework with default configuration can be installed with one click, but if you need more (e.g. specific processor instructions support) it can be easily compiled from source with hardware autodetection.

Running TensorFlow on a GPU is also pretty simple. All you need is NVIDIA CUDA and tensorflow-gpu, a special package with GPU support. The great advantage of TensorFlow is its scalability. It can use multiple GPUs to increase performance as well as clustering for distributed computing.

We decide to take the best of both worlds and adapt the YOLO model for TensorFlow.

Adaptive YOLO for TensorFlow

So our task was to transfer the YOLO model to TensorFlow. We wanted to avoid any third-party dependencies and use YOLO directly with TensorFlow.

At first we needed to port the model definition. The only way to do this is to repeat the model definition layer-by-layer. Luckily for us, there are many open source converters that can do this. For our purposes the most suitable solution is DarkFlow. It runs DarkNet inside but we don’t really need that part.

Instead we added a simple function to DarkFlow that allowed us to save TensorFlow checkpoints with a meta-graph, the gist of which can be found here. This can be done manually, but if you want to try different models it’s way easier to convert it rather than repeat it manually.

The YOLO model that we chose has a strict input size: 608x608 pixels. We needed some sort of interface that can take an image, normalize it, and feed it into a neural network. So we wrote one. It uses TensorFlow for normalization because it works way faster than other solutions we tried (native Python, numpy, openCV).

[bctt tweet="The biggest advantage of the YOLO model is how it got its name - You Only Look Once." username="iotforall"]

The last layer of the YOLO model returns features that must then be processed into something human readable. We added some operations after the last layer to obtain bounding boxes.

Eventually we wrote a Python module that can restore a model from a file, normalize the input data, and post-process the features from the model to get the bounding boxes for the predicted classes.

Teaching the Model

We decided to use a pre-trained model for our purposes. Trained data is available on the official YOLO site. Our next task was to import the DarkNet weights into TensorFlow. This was done as follows:

- Read the layer info from the DarkNet configuration file

- Read the trained data from the DarkNet weights file according to the layer definition

- Prepare the TensorFlow layer based on the DarkNet layer info

- Add biases to the new layer

- Repeat for each layer

We used DarkFlow to achieve this.

Model Architecture and Data Flow

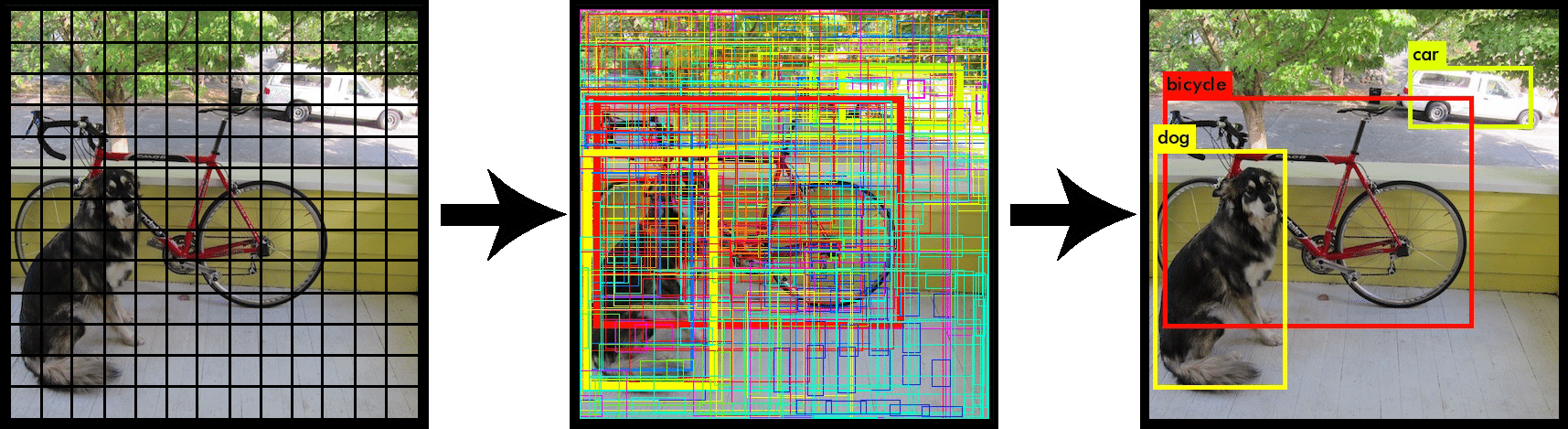

With each iteration a classifier makes a prediction of what type of object is in the window. It performs thousands of predictions per image. Because of this sliding process it works pretty slowly.

The biggest advantage of the YOLO model is how it got its name - You Only Look Once. This model divides an image into a grid of cells. Each cell makes an attempt to predict the bounding boxes with confidence scores for those boxes and class probabilities. Then the individual bounding box confidence score multiplies by a class probability map to get a final class detection score.

Illustration from the YOLO site.

Image Credit: You Only Look Once Website

Implementation

Here you can find our demo project. It provides a pre-trained TensorFlow YOLO2 model. YOLO2 can recognise 80 classes. To run this you need to install some additional dependencies that are required for demonstration purposes (the model interface requires only TensorFlow). After installation just run python eval.py and it will capture a video stream from your webcam, evaluate it, and display the results in simple window with its predictions.

The evaluation process works frame-by-frame and can take some time depending on the hardware on which it is running. On a Raspberry Pi it can take several seconds to evaluate one frame.

You may feed a video file to this script by passing the --video argument like this python eval.py --video="/path_to_video_file/". Also a video URL can be passed (tested with YouTube) python eval.py --video=”https://www.youtube.com/watch?v=hfeNyZV6Dsk”.

The script will skip frames from the camera during evaluation and take the next available frame when the previous evaluation step has completed. For recorded video it won't skip any frames. For most purposes it’s ok to skip some frames to keep the process running in real-time.

IoT Integration

And of course, it would be nice to integrate an IoT service into this project as well as to deliver the recognition results somewhere in a place where other services can have access to them.

Conclusion

As you may see there are plenty of ready-to-go open source projects to implement solutions for almost any case, you just need to use them in the right way. Of course, some modifications are required, but they are much simpler than creating a new model from scratch. The perfect thing about such tools is that they are cross-platform. We can develop a solution on a desktop PC and then use the same code with embedded linux and an ARM board. We really hope that our project will be useful for you and that you will make something clever and open source it.

Written by Igor Panteleyev, Senior Developer at DataArt.

The Most Comprehensive IoT Newsletter for Enterprises

Showcasing the highest-quality content, resources, news, and insights from the world of the Internet of Things. Subscribe to remain informed and up-to-date.

New Podcast Episode

What is Software-Defined Connectivity?

Related Articles