Reimagining Product Design in IoT, Learning by Playing Video Games, and Mathematical Understanding of Neural Networks

- Last Updated: December 2, 2024

Yitaek Hwang

Reimagining Product Design in IoT

It’s no secret that smart home devices are struggling (look no further than the Revolv hub debacle). A month ago, Stacey Higginbotham published her thoughts on how Airbnb could help the smart home industry by introducing new IoT devices inside rental homes. This week, Alex Schleifer, the VP of Design at Airbnb, shared his thoughts on First Round Review about the role of design, or the lack of it: perhaps IoT is suffering from a lack of quality designers.

Summary:

- Challenges to designers are threefold: weak marketing around design roles, nonstandard organizational structure relative to engineering and product dev, and fewer role models.

- To create a design-friendly organization, fuse engineering, product, and design from the outset. If Slack is the poster boy for product development, look at Pocket’s growth with Nikki Will as the Head of Design.

- Surface new tools and establish a vernacular: design teams should evolve in step with engineering and the tech industry in general.

Takeaway: Companies so far have largely focused on the things aspect of IoT. It’s time to remind ourselves that IoT becomes useful when those things start interacting with people. Sure, data drives IoT, but in the end, how that data is processed and visualized is what matters. Quality design should be embedded early in the development process to redefine the landscape and the outlook of IoT.

Learning by Playing Video Games

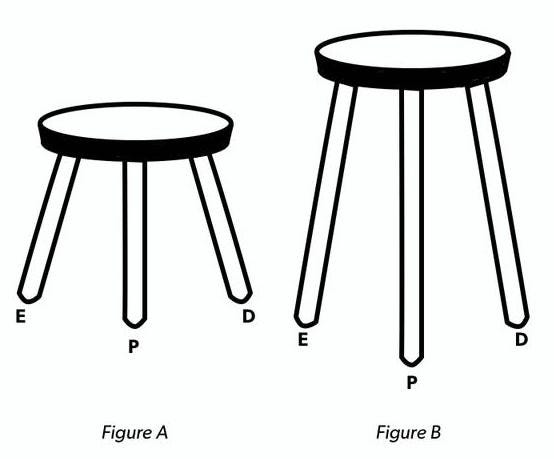

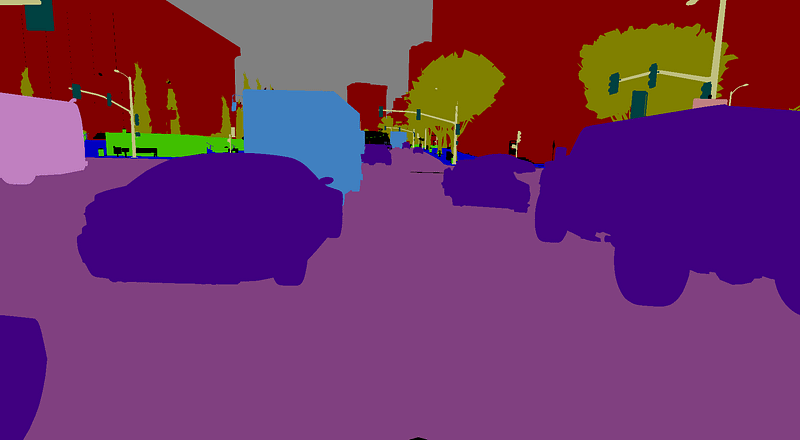

What if I told you that self-driving cars might train their algorithms by playing Grand Theft Auto? ML and big data techniques, as the name suggests, need huge amounts of data to train. Until now, we have been teaching our robots with 3D simulations generated by researchers. Now, researchers from Intel Labs and Darmstadt University in Germany have found ways to extract near-real-life imagery and training data from off-the-shelf video games such as Grand Theft Auto.

Summary:

- AI-driven systems (e.g. self-driving cars) need large training sets, but collecting that data and labeling it so that it is useful to the machine is time-intensive.

- By creating a software layer between video games and AI training sets, researchers can scale this data collection process by controlling every part of the synthetic data (climate, lighting, imagery, etc.).
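To make the scaling argument concrete, here is a minimal sketch of why controlled conditions multiply quickly. It is not the researchers' pipeline: `SceneConfig`, `enumerate_scenes`, and the condition names are hypothetical, chosen only for illustration. The point is that every combination comes with its conditions already known, so nothing has to be labeled by hand.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class SceneConfig:
    """Hypothetical description of one synthetic scene to capture."""
    weather: str
    lighting: str
    camera: str


def enumerate_scenes(weathers, lightings, cameras):
    """Return every combination of the controllable conditions."""
    return [
        SceneConfig(weather, lighting, camera)
        for weather, lighting, camera in product(weathers, lightings, cameras)
    ]


# Illustrative values only; a real game exposes its own set of conditions.
scenes = enumerate_scenes(
    weathers=["clear", "rain", "fog"],
    lightings=["noon", "dusk", "night"],
    cameras=["dashboard", "roof"],
)

# Each scene carries its own metadata, so the conditions act as free labels.
print(len(scenes), "scene configurations")
print(scenes[0])
```

Adding one more value to any list grows the set multiplicatively, which is hard to match when the same conditions have to be waited for and recorded in the real world.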

Takeaway: The research shows that synthetic data may even be superior to real-life data for training AI machines. This opens up new possibilities in tackling problems that were unaddressed due to barriers in collecting quality data. I see huge potential in the medical field, where medical device and pharmaceutical companies can now use simulated data to speed up the development process.

Quote of the Week

“For reasons that are still not fully understood, our universe can be accurately described by polynomial Hamiltonians of low order.”

- Henry Lin at Harvard University and Max Tegmark at MIT.

Significant advances in artificial intelligence in recent years can be attributed to deep neural networks. Despite their success, no one really knew why neural networks were so effective at solving complex problems. Lin and Tegmark now explain that the laws of physics possess properties such that neural networks don’t need to learn an infinite number of possible mathematical functions, but only a tiny subset. This new insight will allow mathematicians to key in on specific functions to improve the performance of deep neural networks (MIT).
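A rough counting exercise gives a feel for how small that subset is. The sketch below assumes a simplified setting that is not taken from the paper's text: it compares the number of coefficients in a polynomial of bounded degree with the number of entries needed to tabulate an arbitrary function of the same binary inputs. The function names are illustrative.

```python
import math


def polynomial_coefficient_count(n_vars: int, max_degree: int) -> int:
    """Number of monomials of total degree <= max_degree in n_vars variables."""
    return math.comb(n_vars + max_degree, max_degree)


def lookup_table_size(n_vars: int, values_per_var: int = 2) -> int:
    """Entries needed to tabulate an arbitrary function of n_vars discrete inputs."""
    return values_per_var ** n_vars


n = 100  # e.g. 100 binary inputs (an illustrative choice)
for degree in (2, 3, 4):
    print(f"degree <= {degree}: {polynomial_coefficient_count(n, degree):,} coefficients")
print(f"arbitrary function: {lookup_table_size(n):.3e} table entries")
```

The low-order polynomial grows polynomially in the number of inputs, while the arbitrary function grows exponentially, which is the gap the quote points at.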

The Rundown

- Review of popular chatbots across platforms (Chatbots Magazine)

- Colors used by the ten most popular sites (Paul Hebert)

- Notes on designing better forms (Medium)

- Designing UI for VR at Disney (Medium)

Resources

- Angular 2.0 Final Release is live

- Vim 8.0 Released on GitHub

- CSS 3 Cool Examples

- Emacs 25.1 Released
