Small Dataset-Based Object Detection

- Last Updated: December 2, 2024

MobiDev

Any machine learning project often starts with the question: “How much data is enough?” The answer depends on a number of factors, such as the diversity of production data, the availability of open-source datasets, and the expected performance of the system, and the list goes on. In this article, we’d like to debunk the popular myth that machines can only learn from large amounts of data, and share a use case of applying ML with a small dataset.

With the rapid adoption of deep learning in computer vision, there is a growing number of diverse tasks that need to be solved with the help of machines. To see how machine learning with small datasets applies in the real world, let’s focus on the task of object detection.

Thanks to advances in object detection algorithms, even a modest dataset can be enough to train a model that identifies a target with high confidence and replaces humans in dangerous or time-consuming tasks.

What is Object Detection?

Object detection is a branch of computer vision that deals with identifying and locating objects in a photo or video. Its goal is to find objects with certain characteristics in a digital image or video with the help of machine learning. Object detection is often a preliminary step for item recognition: first we have to locate the objects, and only then can we apply recognition models to identify specific elements.

Object Detection Business Use Cases

Object detection is a core task of AI-powered solutions for tasks such as visual inspection, warehouse automation, inventory management, security, and more. Below are some object detection use cases that are successfully implemented across industries.

Manufacturing

From quality assurance and inventory management to sorting and assembly, object detection plays an important role in automating many manufacturing processes. Machine learning algorithms allow the system to quickly detect defects or automatically count and locate objects. This helps manufacturers improve inventory accuracy by minimizing human error and the time spent checking and sorting these objects.

Automotive Services

Machine learning is used in self-driving cars, pedestrian detection, and optimizing traffic flow in cities. Object detection is used to perceive vehicles and obstacles in the immediate vicinity of the driver. In transportation, object recognition is used to detect and count vehicles. It’s also used for traffic analysis and helps detect cars that have stopped on highways or at crossroads.

Retail

Object detection helps detect SKUs (Stock Keeping Units) by analyzing and comparing shelf images with the ideal state. Computer vision techniques integrated into hardware help reduce waiting time in retail stores, track the way customers interact with products, and automate delivery.

Healthcare

Object detection is used for studying medical images like CT scans, MRIs, and X-rays. It’s also used in cancer screening in order to help identify high-risk patients, detect abnormalities, and even provide surgical assistance. Applying object detection and recognition to assist with medical examinations for telehealth is a new trend set to change the way healthcare is delivered to patients.

Safety and surveillance

Among the applications of object detection are video surveillance systems capable of people detection and facial recognition. Using machine learning algorithms, such systems are designed for biometric authentication and remote surveillance. This technology has even been used for suicide prevention.

Logistics and warehouse automation

Object detection models are capable of visually inspecting products for defect detection, as well as inventory management, quality control, and automation of supply chain management. AI-powered logistics solutions use object detection models instead of barcode detection, thus replacing manual scanning.

How to Develop an Object Detection System: the PoC Approach

Developing an object detection system for tasks such as the ones mentioned above is no different from any other ML project. It typically starts with building a hypothesis to be checked during several rounds of experimentation.

Such a hypothesis is part of the Proof of Concept (PoC) approach in software development. This approach suits machine learning well, because the deliverable at this stage is not an end product but research results. These results show either that the chosen approach can be used or that extra experiments are needed to choose a different direction.

If the question is “how much data is enough for machine learning”, the hypothesis may be an initial statement such as “150 data samples are enough for the model to reach an optimal level of performance.”

Experienced ML practitioners such as Andrew Ng (co-founder of Google Brain and ex-chief scientist at Baidu) recommend quickly building the first iteration of the system with machine learning functionality, then deploying it and iterating from there.

This approach allows us to create a functional and scalable prototype that can be upgraded with data and feedback from the production team. It is far more efficient than trying to build the final system from the get-go, and a prototype of this kind does not necessarily require large amounts of data.

As for the question of “how much data is enough,” no machine learning expert can predict the exact amount in advance. The only way to find out is to establish a hypothesis and test it under real-world conditions. This is exactly what we did in the following object detection example.

Case Study: Object Detection Using a Small Dataset for Automated Item Counting in Logistics

Our goal was to create an object detection system for logistics. Transporting goods from production to a warehouse, or from a warehouse to other facilities, often requires intermediate checks that reconcile the actual quantity with invoices and a database. Performed manually, this task takes hours of human work and carries a high risk of loss, damage, or injury.

Our initial hypothesis was that a small annotated dataset would be sufficient to address the issue of automatically counting various items for logistics purposes.

The traditional approach that many would take is to use classic computer vision techniques. For instance, one might combine Sobel filter edge detection with the Hough circle transform to detect and count round objects. This method is simple and relatively reliable; however, it is better suited to a controlled environment, such as a production line that produces objects with a well-defined round or oval shape.
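For illustration, here is a minimal pure-Python sketch of the first step of that classic pipeline only: computing the Sobel gradient magnitude of a grayscale image. The Hough circle step that would search these edges for round shapes is omitted, the function name and tiny synthetic image are hypothetical, and in practice one would rely on an optimized library rather than nested loops.

```python
import math


def sobel_magnitude(img):
    """Return the Sobel gradient magnitude of a 2D grayscale image (list of lists).

    Border pixels are left at 0.0 because the 3x3 kernel does not fit there.
    """
    kx = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]  # horizontal gradient kernel
    ky = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]  # vertical gradient kernel
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0
            for dy in range(3):
                for dx in range(3):
                    p = img[y + dy - 1][x + dx - 1]
                    gx += kx[dy][dx] * p
                    gy += ky[dy][dx] * p
            out[y][x] = math.hypot(gx, gy)  # edge strength at this pixel
    return out


# Hypothetical 5x5 image: dark left part, bright right part (a vertical edge).
image = [[0, 0, 10, 10, 10] for _ in range(5)]
edges = sobel_magnitude(image)
print(edges[2])  # → [0.0, 40.0, 40.0, 0.0, 0.0]
```

The response is non-zero only next to the brightness change, which is exactly what a subsequent Hough transform would vote on.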

In the use case we selected, the classical methods are far less reliable, since the shape of the objects, the quality of the images, and the lighting conditions can all vary greatly. Furthermore, these classical methods cannot learn from the data collected, which makes it difficult to refine the system by gathering more data. In this case, the better option is to fine-tune a neural network-based object detector.

Data collection and labeling

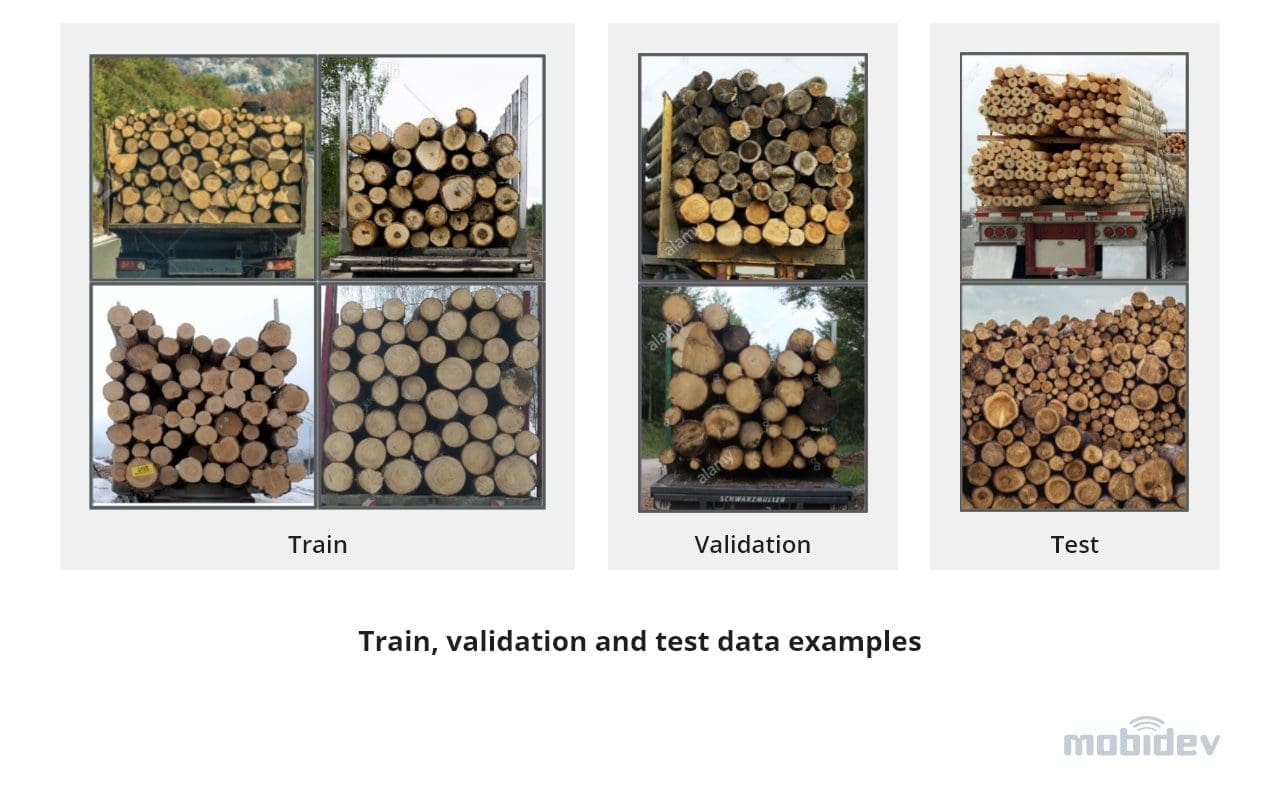

To run an object detection experiment with a small dataset, we collected and manually annotated several images available from public sources. We decided to focus on detecting wood logs and divided the annotated images into training and validation splits.

We also gathered a set of unlabeled test images in which the logs differed in some way from the training and validation images (orientation, size, shape, or color of the logs), to see where the limits of the model’s detection capabilities lie for the given training set.

Source: MobiDev

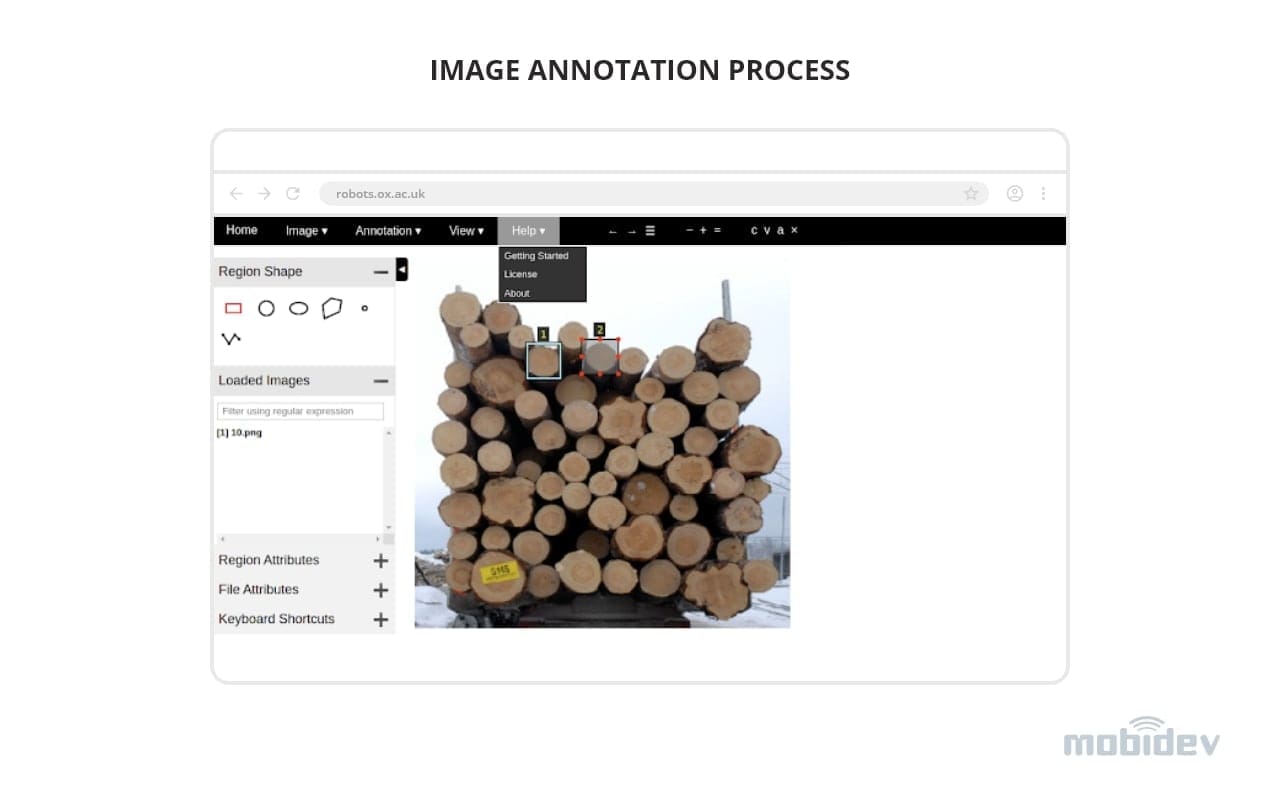

Since we are dealing with object detection, image annotations are represented as bounding boxes. To create them, we used VGG Image Annotator (VIA), an open-source browser-based tool with sufficient functionality for creating a small-scale dataset. Unfortunately, the tool produces annotations in its own format, which we then converted to the COCO object detection format.

Source: MobiDev
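The VIA-to-COCO conversion mentioned above can be sketched as follows. This is a minimal example, assuming VIA 2.x JSON exports with rectangular regions; the function name `via_to_coco`, the single category name `log`, and the `image_sizes` lookup (pixel dimensions are assumed to be missing from the VIA export) are hypothetical choices for illustration, not the exact script used in the project.

```python
import json


def via_to_coco(via_project, image_sizes, category_name="log"):
    """Convert VIA 2.x JSON rectangle annotations to a COCO detection dict.

    Assumes each VIA entry has "filename" and a "regions" list whose
    "shape_attributes" use the "rect" shape (x, y, width, height).
    `image_sizes` maps filename -> (width, height).
    """
    coco = {
        "images": [],
        "annotations": [],
        "categories": [{"id": 1, "name": category_name}],
    }
    ann_id = 1
    for image_id, entry in enumerate(via_project.values(), start=1):
        width, height = image_sizes[entry["filename"]]
        coco["images"].append(
            {"id": image_id, "file_name": entry["filename"], "width": width, "height": height}
        )
        for region in entry["regions"]:
            shape = region["shape_attributes"]
            if shape.get("name") != "rect":
                continue  # this sketch only handles bounding boxes
            x, y, w, h = shape["x"], shape["y"], shape["width"], shape["height"]
            coco["annotations"].append(
                {
                    "id": ann_id,
                    "image_id": image_id,
                    "category_id": 1,
                    "bbox": [x, y, w, h],  # COCO uses [x_min, y_min, width, height]
                    "area": w * h,
                    "iscrowd": 0,
                }
            )
            ann_id += 1
    return coco


# Hypothetical VIA export with a single image and two boxes.
via = {
    "logs_01.jpg12345": {
        "filename": "logs_01.jpg",
        "size": 12345,
        "regions": [
            {"shape_attributes": {"name": "rect", "x": 10, "y": 20, "width": 30, "height": 30},
             "region_attributes": {}},
            {"shape_attributes": {"name": "rect", "x": 50, "y": 25, "width": 28, "height": 32},
             "region_attributes": {}},
        ],
        "file_attributes": {},
    }
}
coco = via_to_coco(via, {"logs_01.jpg": (640, 480)})
print(json.dumps(coco["annotations"][1]["bbox"]))  # → [50, 25, 28, 32]
```

Because VIA's rectangle already stores the top-left corner plus width and height, the box values map onto COCO's `bbox` without any arithmetic.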

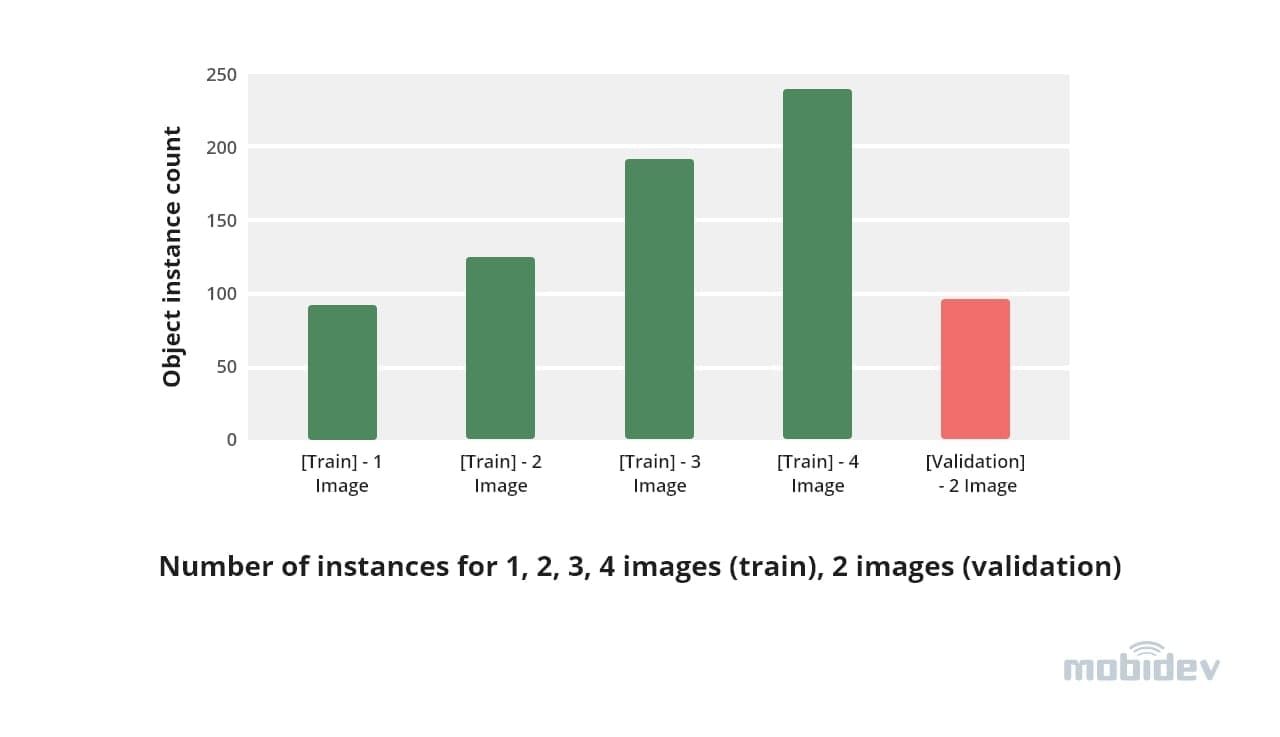

In object detection, the quantity of data is determined not just by the number of images in the dataset, but also by the quantity of individual object instances in each image. In our case, the images were quite densely packed with objects – the number of instances reached 50-90 per image.

Source: MobiDev
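Since instances, not images, are the real unit of training data here, it is worth counting them per image. A minimal sketch over a COCO-style annotation dict; the function name and the tiny dataset are hypothetical:

```python
from collections import Counter


def instances_per_image(coco):
    """Count annotated object instances per image in a COCO-style dict."""
    counts = Counter(ann["image_id"] for ann in coco["annotations"])
    # Include images that have no annotations at all.
    return {img["id"]: counts.get(img["id"], 0) for img in coco["images"]}


# Hypothetical dataset: two images with 3 and 2 boxes, one unlabeled image.
dataset = {
    "images": [{"id": 1}, {"id": 2}, {"id": 3}],
    "annotations": [{"image_id": 1}, {"image_id": 1}, {"image_id": 1},
                    {"image_id": 2}, {"image_id": 2}],
}
per_image = instances_per_image(dataset)
print(per_image, sum(per_image.values()))  # → {1: 3, 2: 2, 3: 0} 5
```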

Detectron2 Object Detection

The model we decided to use was the implementation of Faster R-CNN in Detectron2, the computer vision library from Facebook AI Research.

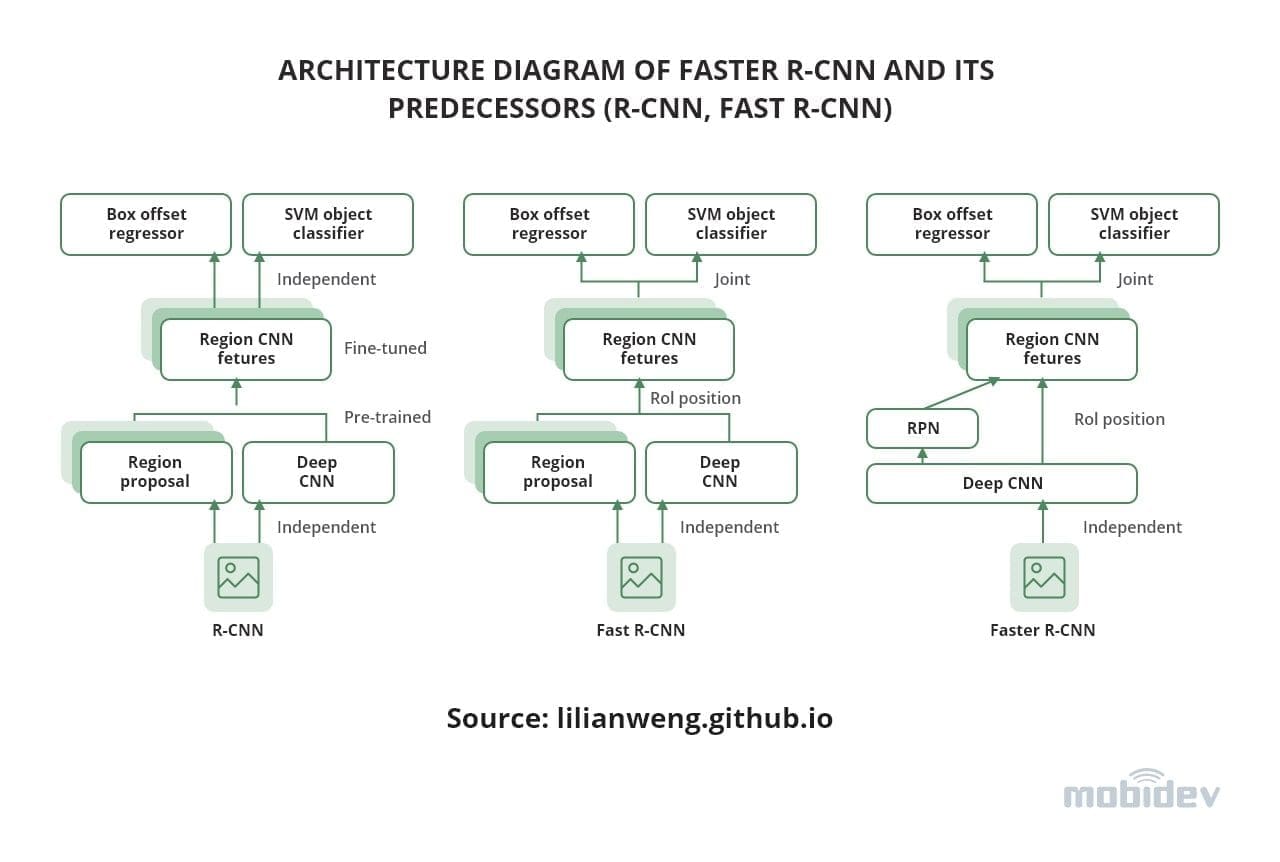

Let’s have a closer look at how Faster R-CNN works. First, an input image is passed through a backbone (a deep CNN pre-trained on an image classification task) and converted into a compressed representation called a feature map. The feature maps are then processed by the Region Proposal Network (RPN), which identifies areas in the feature maps that are likely to contain an object of interest.
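Internally, the RPN scores a fixed grid of reference boxes, called anchors, placed at every feature-map location. The sketch below generates anchors for a single feature map; the function name, stride, size, and aspect ratios are illustrative assumptions rather than the settings used in our experiments, and aspect ratio is taken as height divided by width.

```python
import math


def generate_anchors(feat_h, feat_w, stride, sizes, aspect_ratios):
    """Return anchors as (x1, y1, x2, y2) boxes in image coordinates.

    One anchor per (location, size, aspect ratio); aspect ratio = height / width,
    and each anchor keeps an area of size**2.
    """
    anchors = []
    for row in range(feat_h):
        for col in range(feat_w):
            # Center of this feature-map cell, projected back onto the image.
            cx = (col + 0.5) * stride
            cy = (row + 0.5) * stride
            for size in sizes:
                for ratio in aspect_ratios:
                    w = size / math.sqrt(ratio)
                    h = size * math.sqrt(ratio)
                    anchors.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return anchors


anchors = generate_anchors(feat_h=2, feat_w=3, stride=16, sizes=[32], aspect_ratios=[0.5, 1.0, 2.0])
print(len(anchors))  # → 18 (2 * 3 locations x 3 aspect ratios)
print(anchors[1])    # → (-8.0, -8.0, 24.0, 24.0): square anchor on the first cell
```

The RPN then predicts, for every anchor, an objectness score and a box correction; the highest-scoring corrected anchors become the region proposals.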

Next, these areas are extracted from the feature maps using the RoI (Region of Interest) pooling operation and processed by a bounding box offset head (which predicts accurate bounding box coordinates for each region) and an object classification head (which predicts the class of the object in the region).

Faster R-CNN (Region-based Convolutional Neural Network) is the third iteration of the R-CNN architecture, following R-CNN and Fast R-CNN.

Source: lilianweng.github.io
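RoI pooling turns regions of different sizes into fixed-size grids that the heads can consume. Below is a minimal sketch of classic quantized RoI max pooling on a single-channel feature map, with integer region coordinates assumed for simplicity; the function name is hypothetical. Note that by default Detectron2's Faster R-CNN configurations use RoIAlign, a variant that samples with bilinear interpolation instead of quantizing bin borders, so this sketch shows the original operation rather than Detectron2's.

```python
import math


def roi_max_pool(feature_map, roi, output_size):
    """Max-pool one region of a 2D feature map into an output_size x output_size grid.

    `roi` is (x1, y1, x2, y2) in integer feature-map cells (start inclusive,
    end exclusive). Each output bin takes the maximum over the cells that fall
    into it, with bin borders rounded outward (floor/ceil).
    """
    x1, y1, x2, y2 = roi
    bin_h = (y2 - y1) / output_size
    bin_w = (x2 - x1) / output_size
    pooled = []
    for i in range(output_size):
        row = []
        for j in range(output_size):
            ys = range(y1 + math.floor(i * bin_h), y1 + math.ceil((i + 1) * bin_h))
            xs = range(x1 + math.floor(j * bin_w), x1 + math.ceil((j + 1) * bin_w))
            row.append(max(feature_map[y][x] for y in ys for x in xs))
        pooled.append(row)
    return pooled


# Hypothetical 4x4 feature map holding the values 0..15 in row-major order.
fmap = [[4 * r + c for c in range(4)] for r in range(4)]
pooled = roi_max_pool(fmap, (0, 0, 4, 4), 2)
print(pooled)  # → [[5, 7], [13, 15]]
```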

Faster R-CNN is a two-stage object detection model: it includes the RPN sub-network to sample object proposals. However, two-stage models are not the only option for object detection with a small dataset.

There are also one-stage detectors that try to find the relevant objects without this region proposal stage. One-stage detectors have simpler architectures and are typically faster but less accurate than two-stage models. Examples include the YOLOv4 and YOLOv5 architectures: some of the lighter configurations from these families can reach 50-140 FPS (at the cost of detection quality), compared to Faster R-CNN, which runs at 15-25 FPS at most.
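To put these frame rates in perspective, the per-frame time budget is simply the inverse of the frame rate; the figures below are plain arithmetic on the FPS ranges quoted above, not new measurements.

```latex
\begin{aligned}
t_{\text{frame}} &= \frac{1000\ \text{ms}}{\text{FPS}} \\
\text{light YOLOv4/YOLOv5 configs (50--140 FPS)}: \quad t_{\text{frame}} &\approx 7.1\text{--}20\ \text{ms} \\
\text{Faster R-CNN (15--25 FPS)}: \quad t_{\text{frame}} &\approx 40\text{--}66.7\ \text{ms}
\end{aligned}
```

For counting items in still images, either budget is comfortable; the gap matters mainly for real-time video.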

The original Faster R-CNN paper was published in 2015, and the architecture has received small improvements over time, which are reflected in the Detectron2 library that we used.

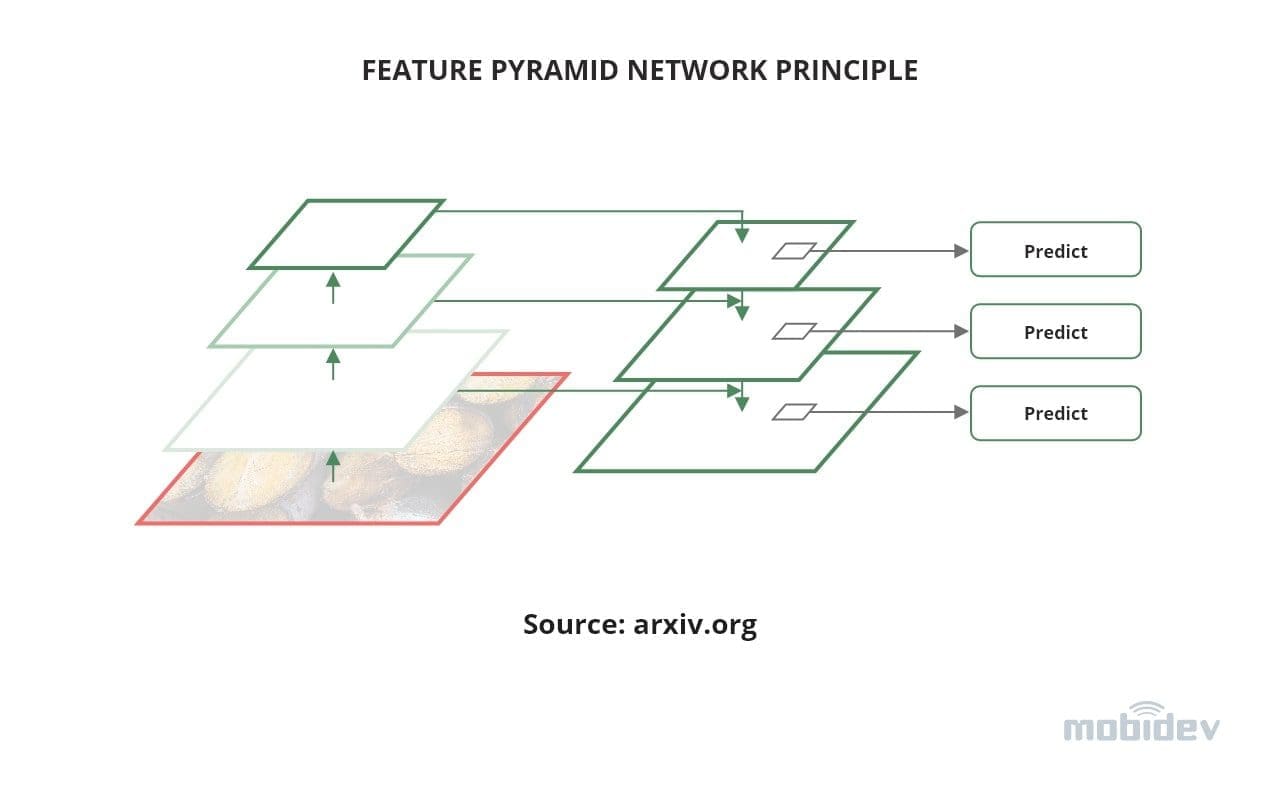

For example, the model configuration selected for our experiments, R50-FPN, uses a ResNet-50 backbone with a Feature Pyramid Network (FPN), a concept introduced in a CVPR 2017 paper that has since become a staple of CNN backbones for feature extraction. In simpler terms, a Feature Pyramid Network is not limited to the deepest feature maps extracted by the CNN; it also uses low- and mid-level feature maps. This enables the detection of small objects that would otherwise be lost during compression down to the deepest levels.

Source: arxiv.org
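The top-down idea behind FPN can be illustrated with a toy sketch. To stay dependency-free, it represents feature maps as plain nested lists of numbers and omits the learned 1×1 lateral and 3×3 output convolutions of the real architecture, so it only shows how coarse, deep maps are upsampled and merged into finer ones. All function names are hypothetical.

```python
def upsample2x(fmap):
    """Nearest-neighbour 2x upsampling of a 2D feature map (list of lists)."""
    out = []
    for row in fmap:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def add(a, b):
    """Element-wise sum of two equally sized 2D maps."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def top_down_pyramid(backbone_maps):
    """Build pyramid levels from backbone maps ordered from fine to coarse.

    Simplified FPN top-down pathway: start from the coarsest (deepest) map and
    repeatedly upsample it and add the next finer map.
    """
    pyramid = [backbone_maps[-1]]
    for finer in reversed(backbone_maps[:-1]):
        pyramid.insert(0, add(finer, upsample2x(pyramid[0])))
    return pyramid  # ordered fine to coarse, like the input


# Toy backbone outputs: 4x4 (shallow), 2x2 (middle), 1x1 (deepest).
c3 = [[1] * 4 for _ in range(4)]
c4 = [[10] * 2 for _ in range(2)]
c5 = [[100]]
p3, p4, p5 = top_down_pyramid([c3, c4, c5])
print(p3[0], p4, p5)  # → [111, 111, 111, 111] [[110, 110], [110, 110]] [[100]]
```

The finest level `p3` keeps its 4×4 resolution (useful for small logs) while also carrying contributions from every deeper level.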

Results

In our experiments, we used the following methodology:

- Take a Faster R-CNN instance pre-trained on the COCO 2017 dataset with 80 object classes.

- Replace the 320 units in the bounding box regression head and the 80 units in the classification head with 4 and 1 units respectively, in order to train the model for 1 novel class. The bounding box regression head has 4 units per class to regress the X, Y, W, H parameters of the bounding box, where X, Y are the coordinates of its center and W, H are its width and height. (In Detectron2’s implementation, the classification head also includes one background unit, so it actually goes from 81 to 2 outputs.)
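Strictly speaking, the four regression outputs per class are not absolute coordinates but offsets relative to the region proposal, as parameterized in the Faster R-CNN paper. A minimal sketch of that encoding and its inverse, with boxes given as (center x, center y, width, height); Detectron2 additionally scales these deltas by fixed per-coordinate weights, which this sketch omits, and the function names and example boxes are hypothetical.

```python
import math


def encode(proposal, target):
    """Faster R-CNN box-regression targets (dx, dy, dw, dh).

    Boxes are (cx, cy, w, h). Offsets are relative to the proposal, so the
    head learns scale-invariant corrections instead of absolute pixel values.
    """
    pcx, pcy, pw, ph = proposal
    gcx, gcy, gw, gh = target
    return ((gcx - pcx) / pw, (gcy - pcy) / ph, math.log(gw / pw), math.log(gh / ph))


def decode(proposal, deltas):
    """Invert encode(): apply predicted deltas to a proposal box."""
    pcx, pcy, pw, ph = proposal
    dx, dy, dw, dh = deltas
    return (pcx + dx * pw, pcy + dy * ph, pw * math.exp(dw), ph * math.exp(dh))


proposal = (50.0, 40.0, 20.0, 10.0)
target = (55.0, 38.0, 40.0, 10.0)
deltas = encode(proposal, target)
print(deltas)  # dx = 0.25, dy = -0.2, dw = log(2) ≈ 0.693, dh = 0.0
```

Since there is one such 4-tuple per class, a head for 80 classes needs 320 regression outputs and a head for one class needs 4.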

After some preliminary runs we picked the following training parameters:

- Model config: R50-FPN

- Learning rate: 0.000125

- Batch size: 2

- RoI head batch size (sampled regions per image): 128

- Max iterations: 200

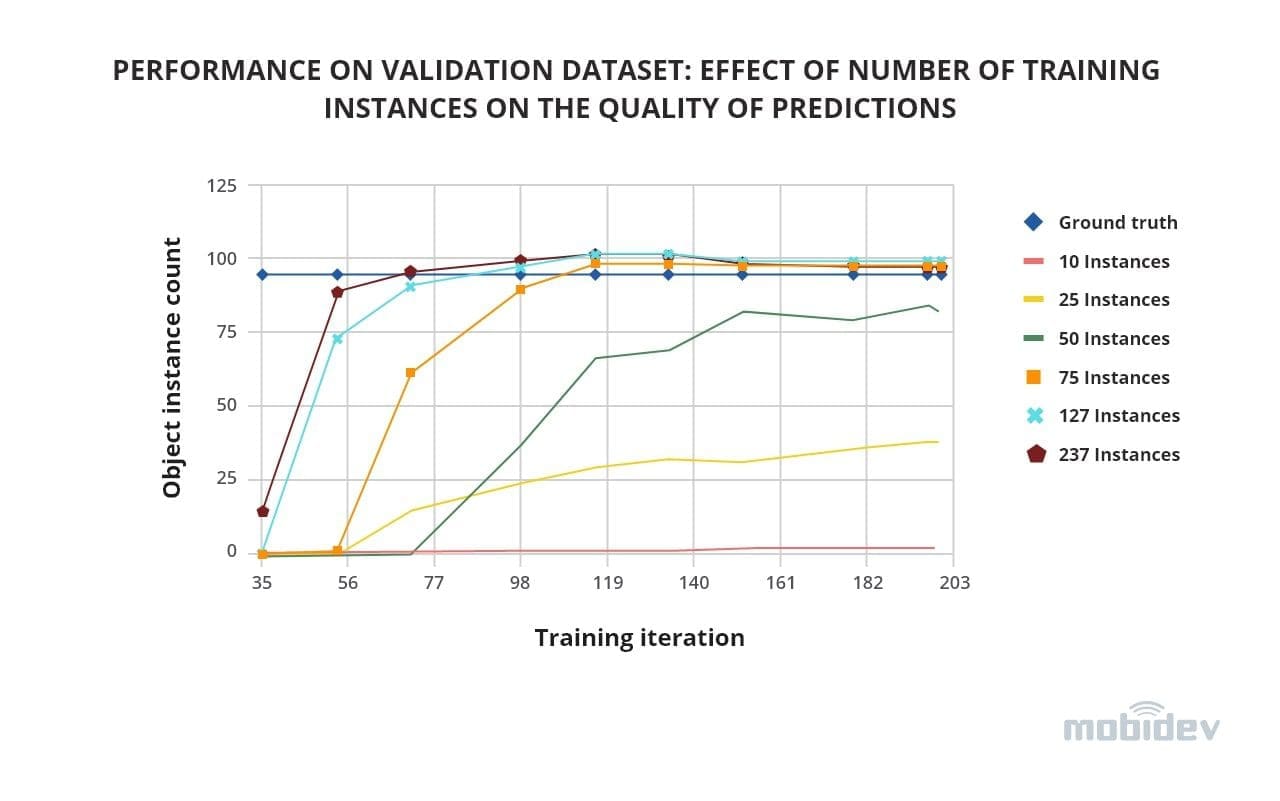

With the parameters set, we turned to the most interesting aspect of training: how many training instances were needed to obtain decent results on the validation set. Since even a single image contained up to 90 instances, we randomly removed part of the annotations to test smaller numbers of instances. We found that for our validation set of 98 instances, the model trained on 10 instances picked up only 1-2 of them, at 25 training instances it already detected approximately 40, and at 75 and higher it was able to predict all of the instances.

Increasing the number of training instances from 75 to 100 and 200 led to the same final results. However, the model converged faster due to the higher diversity of the training examples.

Source: MobiDev
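Reducing the number of training instances by randomly dropping annotations, as described above, might look like this minimal sketch over a COCO-style dict. The function name, fixed seed, and toy dataset are assumptions for illustration.

```python
import random


def subsample_instances(coco, n_instances, seed=0):
    """Return a copy of a COCO-style dict keeping only n randomly chosen boxes.

    Images stay in the dataset; only annotations are dropped, which mimics
    training on fewer object instances than the images actually contain.
    """
    rng = random.Random(seed)  # fixed seed so runs are reproducible
    kept = rng.sample(coco["annotations"], n_instances)
    return {**coco, "annotations": kept}


# Hypothetical single image densely packed with 90 annotated logs.
full = {
    "images": [{"id": 1, "file_name": "logs_01.jpg"}],
    "annotations": [{"id": i, "image_id": 1} for i in range(1, 91)],
}
small = subsample_instances(full, 25)
print(len(small["annotations"]), len(full["annotations"]))  # → 25 90
```

One consequence to keep in mind: the objects whose boxes are dropped are still visible in the image, so during training the detector will typically treat them as background.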

The image below shows predictions on an image from the validation set made by the model trained with 237 instances. There are several false positives (indicated by red arrows), but they have low confidence and could therefore mostly be filtered out by setting the confidence threshold at ~80%.

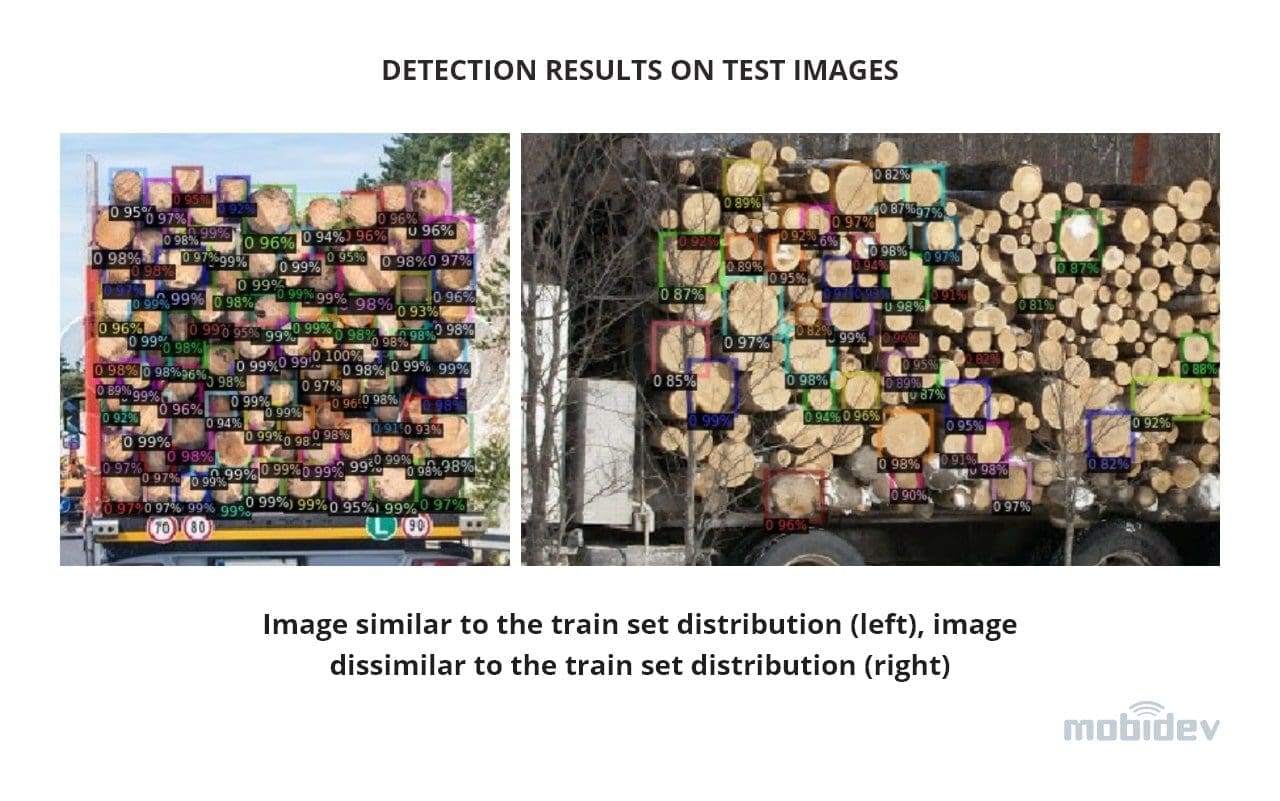
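Applying such a confidence threshold, and then counting what survives, takes only a few lines. A minimal sketch, assuming detections are plain dicts with a `score` field (a hypothetical layout); in Detectron2 the same cutoff is usually applied at inference time through the `MODEL.ROI_HEADS.SCORE_THRESH_TEST` config option.

```python
def filter_by_confidence(detections, threshold=0.8):
    """Keep detections whose score is at least `threshold`."""
    return [d for d in detections if d["score"] >= threshold]


detections = [
    {"bbox": [10, 20, 30, 30], "score": 0.97},
    {"bbox": [50, 25, 28, 32], "score": 0.91},
    {"bbox": [90, 10, 15, 12], "score": 0.34},  # low-confidence false positive
]
kept = filter_by_confidence(detections)
print(len(kept))  # → 2, i.e. the item count for this image
```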

Next, we explored the performance of the trained model on the unlabeled test images. As expected, images similar to the training set distribution received confident, high-quality predictions, whereas images in which the logs had an unusual shape, color, or orientation were much tougher for the model.

Source: MobiDev

However, even on the challenging images from the test set, we observed a positive effect from increasing the number of training instances. The image below shows how the model learns to pick up additional instances (marked by green stars) as the number of training images grows (1 training image: 91 instances; 2-4 images: 127-237 instances).

Source: MobiDev

To sum up, after fine-tuning on 75-200 object instances, the model was able to pick up ~95% of the instances in the validation dataset, provided that the validation data resembled the training data. This shows that selecting proper training examples makes quality object detection possible in a limited-data scenario.
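The ~95% figure is the share of validation instances the model picked up. One common way to compute such a number, assumed here rather than taken from our evaluation code, is recall at an IoU (intersection over union) threshold of 0.5 with greedy one-to-one matching of predictions to ground-truth boxes. The function names and example boxes are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def recall(ground_truth, predictions, iou_threshold=0.5):
    """Fraction of ground-truth boxes matched by a prediction (greedy, one-to-one).

    Predictions are (box, score) pairs, visited from highest to lowest score;
    each one claims the best still-unmatched ground-truth box above the threshold.
    """
    matched = set()
    for box, _score in sorted(predictions, key=lambda p: p[1], reverse=True):
        best, best_iou = None, iou_threshold
        for i, gt in enumerate(ground_truth):
            if i not in matched:
                overlap = iou(box, gt)
                if overlap >= best_iou:
                    best, best_iou = i, overlap
        if best is not None:
            matched.add(best)
    return len(matched) / len(ground_truth) if ground_truth else 0.0


gt = [(0, 0, 10, 10), (20, 0, 30, 10), (40, 0, 50, 10), (60, 0, 70, 10)]
preds = [((1, 0, 11, 10), 0.9), ((20, 1, 30, 11), 0.8), ((45, 0, 55, 10), 0.6)]
print(recall(gt, preds))  # → 0.5 (the third box overlaps too little, the fourth log is missed)
```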

Future of Object Detection

Object detection is one of the most commonly used computer vision technologies to have emerged in recent years, primarily because of its versatility. Some existing models are successfully implemented in consumer electronics or integrated into driver-assistance software, while others form the basis of robotic solutions used to automate logistics and transform the healthcare and manufacturing industries.

The task of object detection is essential for digital transformation, as it serves as a basis for AI-driven software and robotics, which in the long run will enable us to free people from performing tedious jobs and mitigate multiple risks.
