Using GPUs for Deep Learning

Using GPUs for Deep Learning

- Last Updated: December 2, 2024

MobiDev

- Last Updated: December 2, 2024

As AI technology advances and more organizations are implementing machine learning operations (MLOps), companies are looking for ways to speed up processes. This is especially true for organizations working with deep learning (DL) methods which can be incredibly time-intensive to run. This process can be sped up using graphical processing units (GPUs), either on-premises or in the cloud.

GPUs are microprocessors specially designed to perform graphics-oriented tasks. These units utilize parallel processing of tasks and can be optimized to increase performance in artificial intelligence and deep learning processes.

The GPU is a powerful tool for speeding up a data pipeline in combination with a deep neural network. There are principally two reasons to integrate a GPU into data processing. The first is that a deep neural network (DNN) inference runs up to 10 times faster on a GPU compared to a central processing unit (CPU) that retails for the same price. The second reason is that it allows the CPU to do more work simultaneously and reduces the overall network load by taking some of the workloads off of the CPU.

The GPU is a powerful tool for speeding up a data pipeline in combination with a deep neural network. Both on-site and cloud solutions offer their own unique advantages in DNN solutions.

A typical deep learning pipeline that utilizes a GPU consists of:

- Data preprocessing (handled by the CPU)

- DNN training or inference (handled by the GPU)

- Data post-processing (again handled by the CPU)

The most common bottleneck with this approach is data transfer between CPU RAM and GPU DRAM. Therefore there are two main focuses when building a data science pipeline architecture. The first is to reduce the number of data transference transactions by aggregating several samples (images) into a batch. The second is to reduce the size of a specific sample by filtering data before transferring.

The training and implementation of DL models require deep neural networks (DNN) and datasets with hundreds of thousands of data points. These DNNs need significant resources, including memory, storage, and processing power. While central processing units (CPUs) can provide this power, graphical processing units (GPUs) can substantially expedite the process.

The Main Benefits of Using GPU for Deep Learning

There are three significant advantages a dedicated GPU brings to a system implementing a DNN:

- Number of cores—GPUs typically have a large number of cores, can be clustered together, and can be combined with CPUs. This enables you to significantly increase processing power within a system.

- Higher memory bandwidth—GPUs can offer higher memory bandwidth than CPUs (up to 750GB/s vs 50GB/s). This enables them to more easily transfer the large amounts of data required for expedient deep learning.

- Flexibility—a GPU's capacity for parallelism enables you to combine GPUs in clusters and distribute tasks across those clusters. Alternatively, you can use GPUs individually with clusters assigned to the training of individual algorithms.

When Not to Use GPUs for Deep Learning Tasks

GPU calculations are high-speed compared to a CPU's, and GPUs are a must-have component for many AI applications. But in some cases, this processing power is overkill, and system architects should consider utilizing CPUs to minimize strain on their budget.

Here we also need to say a few words about the cost of GPU calculations. As mentioned before, GPUs perform their calculations significantly faster than CPUs. Still, the combined time costs of transferring and processing the data may outweigh the speed you gained by switching to GPU.

So, for example, at the beginning of development, i.e., while developing proof of concept (POC) or a minimum viable product (MVP), one could use CPUs for preliminary activities like development, testing, and staging servers. In cases where users are okay with longer response times, production servers can use the CPU for DNN training and inference, but ideally only for tasks with a short overall duration.

On-Premises GPU Options for Deep Learning

When using GPUs for on-premises implementations, multiple vendor options are available. Two of the most popular choices are NVIDIA and AMD.

NVIDIA

NVIDIA is a popular option because of the first-party libraries it provides, known as the CUDA toolkit. These libraries enable the easy establishment of deep learning processes and form the base of a strong machine learning community using NVIDIA products. This is evidenced by the widespread support that many DL libraries and frameworks provide for NVIDIA hardware.

In addition to supporting their GPU hardware, NVIDIA also offers libraries supporting popular DL frameworks, including PyTorch. The Apex library is particularly useful and includes several fast, fused optimizers such as FusedAdam.

The downside of a system integrating NVIDIA GPUs is that the company has recently placed restrictions on the configurations in which CUDA can be used. These restrictions require that the libraries only be used with the Tesla line of GPUs and cannot be used with the less expensive RTX or GTX hardware lines. This has had serious budget implications for organizations training DL models; it is also problematic when considering that although Tesla GPUs do not offer significantly more performance than the other options, the units cost up to 10x as much.

AMD

AMD also provides a suite of first-party libraries, known as ROCm. These libraries are supported by TensorFlow and PyTorch, as well as all significant network architectures. Support for the development of new networks is limited, however, as is community support.

Another issue with utilizing AMD GPUs is that AMD does not invest as much into its DL software as NVIDIA. Because of this, AMD GPUs provide limited functionality compared to NVIDIA outside of their lower price points.

Cloud Computing with GPUs

Another option growing in popularity with organizations training DL models is the use of cloud resources. These resources can provide pay-for-use access to GPUs in combination with optimized machine learning services. Of the significant providers of cloud computing resources (Microsoft Azure, AWS, and Google Cloud), all three offer GPU resources along with a host of configuration options:

Microsoft Azure

Microsoft Azure grants a variety of instance options for GPU access. These instances have been optimized for high computation tasks, including visualization, simulations, and deep learning.

Within Azure, there are three main series of instances you can choose from:

- NC-series: Instances are optimized for network- and computing-intensive workloads. For example, CUDA and OpenCL-based simulations and applications would make use of an NC-series instance. These instances provide access to NVIDIA Tesla V100, Intel Haswell, or Intel Broadwell GPUs.

- ND-series: Instances are optimized for inference and training scenarios for deep learning. These instances provide access to NVIDIA Tesla P40, Intel Broadwell, or Intel Skylake GPUs.

- NV-series: Instances are optimized for virtual desktop infrastructures, streaming, encoding, and visualizations. NV-series instances specifically support DirectX and OpenGL. Instances provide access to NVIDIA Tesla M60 or AMD Radeon Instinct MI25 GPUs.

Amazon Web Services (AWS)

In AWS, there are four different options depending on the level of processing power and memory required, each with various instance sizes. Options include EC2 P3, P2, G4, and G3 instances. These options enable you to choose between NVIDIA Tesla V100, K80, T4 Tensor, or M60 GPUs. You can scale up to 16 GPUs depending on the instance.

To enhance these instances, AWS also offers Amazon Elastic Graphics, a service that enables you to attach low-cost GPU options to your EC2 models. This service allows you to use GPUs with any compatible instance as needed and thus provides greater flexibility for your workloads. Elastic Graphics provides support for OpenGL 4.3 and can offer up to 8GB of graphics-specific memory.

Google Cloud

Rather than dedicated GPU instances, Google Cloud enables you to attach GPUs to your existing instances. For example, if you use Google Kubernetes Engine, you can create node pools with access to a range of GPUs. These include NVIDIA Tesla K80, P100, P4, V100, and T4 GPUs.

Google Cloud also offers the TensorFlow processing unit (TPU). This specialized unit includes multiple GPUs designed for performing fast matrix multiplication. It provides similar performance to Tesla V100 instances with Tensor Cores enabled. The main benefit of using the TPU is that it can offer cost savings through parallelization.

Each TPU is the equivalent of four GPUs, enabling comparatively larger deployments. Additionally, these TPUs are now at least partially supported by PyTorch.

What Is the Best GPU for Deep Learning Tasks in 2021?

When the time comes to select an infrastructure, a decision needs to be made between an on-premises and a cloud approach.

Cloud resources can significantly lower the financial barrier to building a DL infrastructure, and these services can also provide scalability and provider support. However, these infrastructures are best suited for short-term projects since consistent resource use can cause costs to balloon.

In contrast, on-premises infrastructures are more expensive upfront but provide greater flexibility. The hardware can be used for as many experiments as necessary over the useful lifetime of the components, all with stable costs. Developers opting to use on-premises configurations also retain complete control over their configurations, security, and data.

For organizations that are just getting started, cloud infrastructures generally make more sense. These deployments enable you to start running with minimal upfront investment and can give you time to refine your processes and requirements. However, once the operation grows large enough, switching to on-premises could be the wiser choice.

Using a GPU for AI Training

Because GPUs are specialized processors with an instruction set focused on rapid graphics processing, developers of a deep learning application face a problem of translation between the task they are using the GPU for and its intended purpose. Traditional deep learning frameworks like Tensorflow, Pytorch, or ONNX thus cannot directly access GPU cores to solve deep learning problems on them. Instead, these tasks need to be translated through several layers of unique software designed specifically for this task, like CUDA and drivers for the GPU.

While the generic schema covers the minimum layers of software needed to use GPU cores, some implementations that use the aforementioned cloud computing services may introduce additional components between the AI application and CUDA layers.

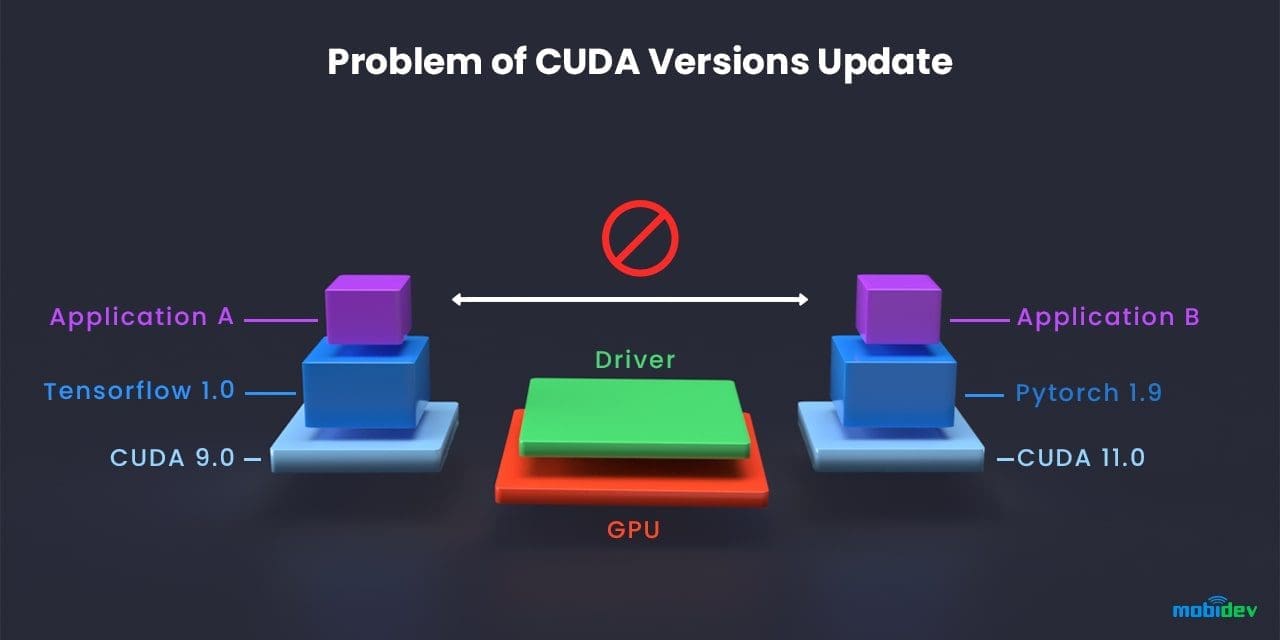

But in real-world AI software development, new versions of AI applications, AI frameworks, CUDA, and GPU drivers often emerge. These new versions may introduce compatibility issues between the rest of the development environment and the updated component that become difficult to resolve.

For example, a security vulnerability may necessitate using a new version of an AI framework that is not compatible with the current version of CUDA on the computation service. This then raises the question: should CUDA be updated to fix the compatibility clash that the new AI framework introduced? Other existing projects which use a different AI framework but the same underlying translation layers rely on the previous version of CUDA for their application, so forcing multiple projects to align their development system stack to any single project's needs is an untenable solution.

Source: MobiDev

In short, it is not viable to have two different versions of CUDA installed simultaneously on the system in this example. The solution to this impasse is modularity in system design. By utilizing a technique known as dockerization, the system can maintain multiple instances of the higher layers of the system stack and utilize whichever one of them is appropriate for the application's current needs.

The system can use Docker and Nvidia-docker to wrap AI applications, bundling all necessary dependencies like AI Framework and the appropriate version of CUDA into a container. This approach allows the system to maintain different versions of tools like Tensorflow, Pytorch, and CUDA on the same computation service machine.

Conclusion

To advance quickly, machine learning workloads require high processing capabilities. GPUs can increase processing power compared to CPUs, along with higher memory bandwidth and a capacity for parallelism.

Developers of AI applications can use GPUs either on-premises or in the cloud. Popular on-premise GPUs include NVIDIA and AMD. Cloud-based GPUs can be provided by many cloud vendors, with the three most prominent vendors being Microsoft Azure, AWS, and Google Cloud. When choosing between on-premise and cloud GPU resources, both budget and skills should be taken into consideration.

On-premise resources typically come with a high upfront cost, offset by steady returns in the long run. However, suppose an organization does not have the necessary skills or budget to operate on-premise resources. In that case, they should consider cloud offerings, which can be easier to scale and typically come with managed options.

The Most Comprehensive IoT Newsletter for Enterprises

Showcasing the highest-quality content, resources, news, and insights from the world of the Internet of Things. Subscribe to remain informed and up-to-date.

New Podcast Episode

What is Software-Defined Connectivity?

Related Articles